Bas Steunebrink on Self-Reflective Programming

Bas Steunebrink is a postdoctoral researcher at the Swiss AI lab IDSIA, as part of Prof. Schmidhuber’s group. He received his PhD in 2010 from Utrecht University, the Netherlands. Bas’s dissertation was on the subject of artificial emotions, which fits well in his continuing quest of finding practical and creative ways in which general intelligent agents can deal with time and resource constraints. A recent paper on how such agents will naturally strive to be effective, efficient, and curious was awarded the Kurzweil Prize for Best AGI Idea at AGI’2013. Bas also has a great interest in anything related to self-reflection and meta-learning, and all “meta” stuff in general.

Luke Muehlhauser: One of your ongoing projects has been a Gödel machine (GM) implementation. Could you please explain (1) what a Gödel machine is, (2) why you’re motivated to work on that project, and (3) what your implementation of it does?

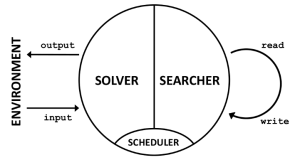

Bas Steunebrink: A GM is a program consisting of two parts running in parallel; let’s name them Solver and Searcher. Solver can be any routine that does something useful, such as solving task after task in some environment. Searcher is a routine that tries to find beneficial modifications to both Solver and Searcher, i.e., to any part of the GM’s software. So Searcher can inspect and modify any part of the Gödel machine. The trick is that the initial setup of Searcher only allows Searcher to make such a self-modification if it has a proof that performing this self-modification is beneficial in the long run, according to an initially provided utility function. Since Solver and Searcher are running in parallel, you could say that a third component is necessary: a Scheduler. Of course, Searcher also has read and write access to the Scheduler’s code.
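To make the Solver / Searcher / Scheduler split concrete, here is a toy Python sketch. It is not taken from the implementation discussed in this interview, and every name in it (`ToyGM`, `utility`, `proved_beneficial`, `TASKS`) is hypothetical. It rests on three loud simplifications: the "proof" of benefit is replaced by an exhaustive utility comparison over a small finite task set, that check can only evaluate rewrites of Solver (rewrites of other components are rejected), and "running in parallel" is approximated by a round-robin Scheduler that interleaves one Solver step with one Searcher step.

```python
from typing import Callable, Dict, Iterator, List, Tuple

TASKS = range(10)  # assumption: a small finite task set, so benefit can be checked exhaustively


def utility(solver: Callable[[int], int]) -> int:
    """Toy utility function: how many tasks the solver answers with n * n."""
    return sum(1 for n in TASKS if solver(n) == n * n)


class ToyGM:
    """Hypothetical toy model of the Solver / Searcher / Scheduler architecture."""

    def __init__(self, solver: Callable[[int], int], proposals) -> None:
        # All components sit in one mutable table, so Searcher may overwrite any entry.
        self.code: Dict[str, Callable] = {
            "solver": solver,
            "searcher": self.searcher_step,
            "scheduler": self.round_robin,
        }
        # Candidate self-modifications are supplied from outside in this sketch;
        # a real Searcher would generate them itself.
        self.proposals: Iterator[Tuple[str, Callable]] = iter(proposals)
        self.answers: List[int] = []

    def proved_beneficial(self, name: str, candidate: Callable) -> bool:
        # Stand-in for proof search (NOT a real proof): exhaustive utility
        # comparison, and only for rewrites of the solver.
        return name == "solver" and utility(candidate) > utility(self.code["solver"])

    def solver_step(self, task: int) -> None:
        self.answers.append(self.code["solver"](task))

    def searcher_step(self) -> None:
        proposal = next(self.proposals, None)
        if proposal is not None and self.proved_beneficial(*proposal):
            name, candidate = proposal
            self.code[name] = candidate  # self-modification, applied only after the check

    def round_robin(self) -> None:
        # Scheduler: interleave one Solver step with one Searcher step.
        for task in TASKS:
            self.solver_step(task)
            self.code["searcher"]()

    def run(self) -> None:
        self.code["scheduler"]()


gm = ToyGM(
    solver=lambda n: n + n,  # weak initial Solver: correct only for n = 0 and n = 2
    proposals=[
        ("solver", lambda n: 0),      # lower utility than the current Solver: rejected
        ("solver", lambda n: n * n),  # strictly higher utility: accepted
    ],
)
gm.run()
```

The point of the sketch is the gate in `searcher_step`: a candidate replaces a component only after the benefit check passes against the initially provided utility function. Everything that makes an actual Gödel machine hard, namely proving long-run benefit for arbitrary rewrites, including rewrites of Searcher and Scheduler themselves, is exactly what the stand-in check leaves out.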
