Web developer Maciej Cegłowski recently gave a talk on AI safety (video, text) arguing that we should be skeptical of the standard assumptions that go into working on this problem, and doubly skeptical of the extreme-sounding claims, attitudes, and policies these premises appear to lead to. I’ll give my reply to each of these points below.

First, a brief outline: this will mirror the structure of Cegłowski’s talk in that first I try to put forth my understanding of the broader implications of Cegłowski’s talk, then deal in detail with the inside-view arguments as to whether or not the core idea is right, then end by talking some about the structure of these discussions.

(i) Broader implications

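Cegłowski’s primary concern seems to be that there are many ways to misuse AI in the near term, and that worrying about long-term AI hazards may distract attention from these short-term misuses. His secondary concern seems to be that, from the outside, worrying about AI risk looks suspect. Humans have a long tradition of millennialism, or the belief that the world will radically transform in the near future. Historically, most millennialists have turned out to be wrong and have behaved in self-destructive ways. If you think that UFOs will land shortly to take you to heaven, you might make some short-sighted financial decisions, and when the UFOs don’t arrive, you’ll regret them.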

I think the fear that focusing on long-term AI dangers will distract from short-term AI dangers is misplaced. Attention to one kind of danger will probably help draw more attention to other, related kinds of danger. Also, risks associated with extraordinarily capable AI systems appear to be more difficult and complex than risks associated with modern AI systems in the short term, suggesting that the long-term obstacles will require more lead time to address. If it is as easy to avert these dangers as some optimists think, then we lose very little by starting early; if it is difficult (but doable), then we lose much by starting late.

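Regarding the outside-view concerns, I question how much we can learn about external reality by focusing on human psychology. Many people have believed, for one reason or another, that they could fly. But some people actually could fly, and anyone who bet against the Wright brothers based on psychological and historical patterns of error (rather than, in this case, on regularities in physics and engineering) would have lost their money. The best way to get those bets right is to wade into the messy inside-view arguments.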

As a Bayesian, I agree that we should update on surface-level evidence that an idea is weird or crankish. But I also think that argument screens off authority: if someone who looks vaguely like a crank can’t provide good arguments for why they expect UFOs to land in Greenland in the next hundred years, and someone else who looks vaguely like a crank can provide good arguments for why they expect AGI to be created in the next hundred years, then once I’ve heard their arguments I don’t need to put much weight on whether or not they initially looked like a crank. Surface appearances are genuinely useful, but only to a point. And even if we insist on reasoning based on surface appearances, I think those look pretty good.1

Cegłowski put forth 11 inside-view and 11 outside-view critiques that I’ll paraphrase and then address:

(ii) Inside-view arguments

1. The argument from woolly definitions

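Many arguments for AI safety trade on definitional tricks: the sentences “A implies B” and “B implies C” both seem obvious, and this is used to argue for the less obvious claim “A implies C”; but in fact “B” is being used in two different senses in the first two sentences.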

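That’s true of a lot of the low-grade futurism out there, but I’m not aware of any examples of Bostrom making this mistake. The best arguments for long-term AI safety work depend on some vague terms, because we don’t have a good formal understanding of many of the concepts involved. But that’s different from saying that the arguments rest on ambiguous or equivocal terms. In my experience, the substance of the debate doesn’t actually change much if we spell out specific phrases like “general intelligence.”2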

The basic idea is that human brains are good at solving various cognitive problems, and the capacities that make us good at solving problems often overlap across different categories of problem. People who have more working memory find that this helps with almost all cognitively demanding tasks, and people who think more quickly again find that this helps with almost all cognitively demanding tasks.

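Coming up with better solutions to cognitive problems also seems critically important in interpersonal conflicts, both violent and nonviolent. By this I don’t mean that book learning will automatically lead to victory in combat,3 but rather that designing and aiming a rifle are both cognitive tasks. In computer security, we’re already seeing people develop AI systems that find vulnerabilities in programs so that they can be fixed. The implications for black hats are obvious.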

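The core difference between people and computers here seems to be that computers are much better able to reinvest the returns on cognitive work into more capacity for cognitive work. People can learn things, but they have a limited ability to improve their ability to learn things, or to improve their ability to improve their ability to learn things, and so on. For computers, it seems much easier for software and hardware improvements to yield better software and hardware options.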

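The loop of using computer chips to make better computer chips is already much more impressive than the loop of using people to make better people. We’re only just starting on the loop of using machine learning algorithms to make better machine learning algorithms, but we can reasonably expect it to be another impressive loop.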
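A toy illustration of why reinvestment matters, with made-up parameters (`gain`, `rate`, and the step count are arbitrary assumptions, not estimates of any real system). In the first process each step adds a fixed amount of capability; in the second, each step’s gain is proportional to current capability, which is roughly what reinvesting the returns on cognitive work amounts to.

```python
def fixed_improvement(start: float, gain: float, steps: int) -> float:
    """Capability rises by the same fixed amount each step: returns are not reinvested."""
    level = start
    for _ in range(steps):
        level += gain
    return level


def reinvested_improvement(start: float, rate: float, steps: int) -> float:
    """Each step's gain is proportional to current capability: returns are reinvested."""
    level = start
    for _ in range(steps):
        level += rate * level
    return level


linear = fixed_improvement(start=1.0, gain=0.1, steps=50)
compounding = reinvested_improvement(start=1.0, rate=0.1, steps=50)
print(f"fixed gains:      {linear:.1f}")       # 6.0
print(f"reinvested gains: {compounding:.1f}")  # 117.4
```

With identical per-step numbers, the fixed process ends at about 6 and the reinvesting one at about 117, and the gap widens with every further step. This is not a model of any actual AI system; it only shows that the structure of the loop matters more than the size of any one improvement.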

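The important takeaway here is the specific dynamics the argument relies on, not the terms I’ve used. Some problem-solving abilities seem much more general than others: whatever cognitive features make us better than mice at building submarines, particle accelerators, and pharmaceuticals must have evolved to solve the quite different problems of our ancestral environment, and certainly don’t depend on distinct modules in the brain for marine engineering, particle physics, and biochemistry. These relatively general abilities look useful for things like strategic planning and technological innovation, which in turn look useful for winning conflicts. And machine brains are likely to have some dramatic advantages over biological brains, in part because they’re easier to redesign (and the task of redesigning AI may itself be delegated to AI systems) and much easier to scale.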

2. The argument from Stephen Hawking’s cat

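Stephen Hawking is much smarter than a cat, but he isn’t very good at predicting cat behavior, and his physical limitations sharply reduce his ability to control cats. So superhuman AI systems (especially if they are disembodied) may be similarly ineffective at modeling or controlling humans.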

How relevant are bodies? One might think that a robot is able to fight its captors and run away on foot, while a software intelligence contained in a server farm will be unable to escape.

This seems incorrect to me, for fairly mundane reasons. In the modern economy, an internet connection is enough. One doesn’t need a body to place stock trades (as evidenced by the army of algorithmic traders that already exist), to sign up for an email account, to email subordinates, to hire freelancers (or even permanent employees), to convert speech to text or text to speech, to call someone on the phone, to acquire computational hardware on the cloud, or to copy over one’s source code. If an AI system needed to get its cat into a cat carrier, it could hire someone on TaskRabbit to do it like anyone else.

3. The argument from Einstein’s cat

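Einstein could probably force a cat into a carrier, but he would do it mostly by using his physical strength, and his intellectual advantage over the average person wouldn’t help much. This suggests that superhuman AI won’t be very powerful in practice.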

Cats can be trained, if you have time to set up an operant conditioning schedule.

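More importantly, though, humans aren’t cats. We’re much more social and collaborative, and we often base our behavior on abstract ideas and chains of reasoning. This makes it easier to persuade (or hire, blackmail, etc.) a human than to persuade a cat, using only a speech or text channel and no physical threat. None of this relies in any obvious way on agility or brawn.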

4. The argument from emus

When the Australian military attempted to massacre emus in the 1930s, the emus outmaneuvered them. Again, this suggests that superhuman AI systems are less likely to be able to win conflicts with humans.

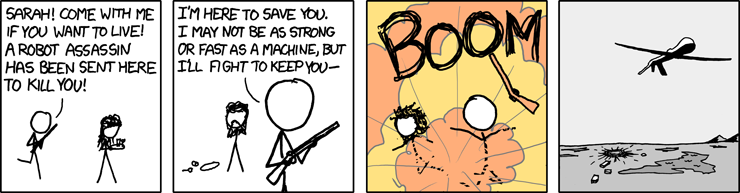

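Science fiction often depicts wars between humans and machines in which both sides have a chance of winning, because that makes for better drama. I think xkcd does a better job of depicting how this would look: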

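Repeated encounters favor the smarter and more adaptive party; we went from fighting rats with clubs and cats to fighting them with traps, poison, and birth control, and if we weren’t worried about possible downstream effects, we could probably engineer a bioweapon that kills them all.4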

5. The argument from Slavic pessimism

“We can’t build anything right. We can’t even build a secure webcam. So how are we supposed to solve ethics and code a moral fixed point for a recursively self-improving intelligence without fucking it up, in a situation where the proponents argue we only get one chance?”

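This is a good reason not to try to do that. A sensible AI safety roadmap should be designed to route around any need to “solve ethics” or to get everything right on the first try. This is the idea behind finding ways to make advanced AI systems pursue limited tasks rather than open-ended goals, making such systems corrigible, defining impact measures and building systems to have a low impact, etc. “Alignment for Advanced Machine Learning Systems” and error-tolerant agent designs are chiefly about finding ways to reap the benefits of smarter-than-human AI without demanding perfection.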

6. The argument from complex motivations

Complex minds are likely to have complex motivations; that may be part of what it even means to be intelligent.

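This comes up in two places when discussing AI alignment. First, human values and motivations are complex, so simple proposals for what an AI should care about probably won’t work. Second, AI systems will probably have convergent instrumental goals: regardless of what project they’re trying to complete, they’ll observe that there are some common strategies that help them complete it.5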

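Some convergent instrumental strategies can be found in Omohundro’s paper “The Basic AI Drives.” High intelligence probably does require a complex understanding of how the world works and which kinds of strategies help achieve goals. But it doesn’t seem like that complexity has to spill over into the content of the goals themselves; there’s nothing incoherent about the idea of a complex system with a simple overarching goal. If it helps, imagine a corporation trying to maximize its net present value: a simple overarching goal that nevertheless results in lots of complex organization and planning.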

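A core skill in thinking about AI alignment is being able to visualize the consequences of running various algorithms or executing various strategies without falling into anthropomorphism. One could design an AI system whose overarching goals change with time and circumstance, and it looks like humans often work this way. But having complex or unstable goals doesn’t imply that you’ll have humane goals, and simple, stable goals are also perfectly possible.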

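For example: suppose an agent is weighing two plans, one of which involves writing poetry and the other of which involves building a paperclip factory, and it evaluates them based on the expected number of paperclips produced (rather than on whatever complex things motivate humans). Then we should expect it to prefer the second plan, even if a human could construct an elaborate verbal argument for why the first is “better.”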
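A minimal sketch of the agent in this example. The plan names, outcome probabilities, and paperclip counts are hypothetical numbers chosen for illustration; the point is only that an agent ranking plans by a single expected-value score picks whichever plan scores higher, and nothing in that scoring rule looks at whether a plan is “better” by human lights.

```python
from dataclasses import dataclass


@dataclass
class Plan:
    name: str
    # Hypothetical (probability, paperclips produced) pairs, one per possible outcome.
    outcomes: list[tuple[float, float]]


def expected_paperclips(plan: Plan) -> float:
    """The agent's entire objective: the expected number of paperclips."""
    return sum(p * clips for p, clips in plan.outcomes)


def choose(plans: list[Plan]) -> Plan:
    """Pick the plan with the highest expected paperclip count."""
    return max(plans, key=expected_paperclips)


plans = [
    Plan("write poetry", [(1.0, 0.0)]),
    Plan("build paperclip factory", [(0.9, 1_000_000.0), (0.1, 0.0)]),
]

print(choose(plans).name)  # build paperclip factory
```

However elaborate the verbal case for poetry, it can only change this agent’s choice if it changes the number `expected_paperclips` returns.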

7. The argument from actual AI

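Current AI systems are relatively simple mathematical objects trained on massive amounts of data, and most avenues for improvement look like just adding more data. This doesn’t seem like a recipe for recursive self-improvement.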

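That may be true, but “it’s important to start thinking today about mishaps involving smarter-than-human AI systems” doesn’t imply “smarter-than-human AI systems are imminent.” We should think about the issue now because it’s important, and because there’s relevant technical research we can do today to get a better handle on it, not because we’re confident about timelines.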

(Also, that may not be true.)

8. The argument from Cegłowski’s roommate

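“My roommate was the smartest person I ever met in my life. He was incredibly brilliant, and all he did was lie around and play World of Warcraft between bong rips.” Advanced AI systems may be similarly unambitious in their goals.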

Humans aren’t maximizers. This suggests that we may be able to design advanced AI systems to pursue limited tasks and thereby avert the kinds of disasters Bostrom is talking about. However, immediate profit incentives may not lead us in that direction by default, if gaining an extra increment of safety means trading away some annual profits or falling behind the competition. If we want to steer the field in that direction, we need to actually start work on better formalizing “limited task.”

There are obvious profit incentives for developing systems that can solve a wider variety of practical problems more quickly, reliably, skillfully, and efficiently; there aren’t corresponding incentives for developing the perfect system for playing World of Warcraft and doing nothing else.

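Or, to put it another way: AI systems are unlikely to have limited ambitions by default, because maximization is easier to specify than laziness. Note how game theory, economics, and AI are rooted in mathematical formalisms that describe an agent attempting to maximize some utility function. If we want AI systems that have “limited ambitions,” it isn’t enough to say “perhaps they’ll have limited ambitions”; we have to start exploring how to actually make them that way. For more on this topic, see the “low impact” problem in “Concrete Problems in AI Safety” and other related papers.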

9. The argument from brain surgery

Humans can’t operate on the part of themselves that’s good at neurosurgery and then iterate this process.

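Humans can’t do this, but this is one of the obvious ways in which humans and AI systems might differ! If a human discovers a better way to build neurons or mitochondria, they probably can’t use it on themselves. If an AI system discovers that it can use bitshifts instead of multiplications to do neural network computations more quickly, it can push a patch to itself, restart, and start working better. Or it can copy its source code to very quickly build a “child” agent.6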
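A toy sketch of the bitshift example; all function names are hypothetical. One caveat: shifting only works on integers, so the trick applies to integer or fixed-point (quantized) arithmetic rather than to ordinary floating-point weights. The sketch checks that the faster routine agrees with the original on sample inputs and, if it does, swaps it in at runtime, a miniature software analogue of “pushing a patch to itself.”

```python
def scale_by_multiplication(values: list[int], k: int) -> list[int]:
    """Original routine: multiply each integer by 2**k."""
    return [v * (2 ** k) for v in values]


def scale_by_shift(values: list[int], k: int) -> list[int]:
    """Candidate replacement: a left shift by k bits, equivalent for integers."""
    return [v << k for v in values]


# The currently active implementation.
scale = scale_by_multiplication

# Check the candidate against the original on sample inputs (including
# negative numbers) before swapping it in, i.e. "pushing the patch".
samples = list(range(-64, 65))
if all(scale_by_shift(samples, k) == scale_by_multiplication(samples, k) for k in range(16)):
    scale = scale_by_shift

print(scale.__name__)     # scale_by_shift
print(scale([3, -5], 3))  # [24, -40]
```

A sample-based check like this is not a proof of equivalence in general; here the equivalence happens to hold for every Python integer, since `x << k == x * 2**k`.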

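It seems like many AI improvements would work this way. If an AI system designs faster hardware, or just buys more hardware, it will be able to tackle bigger problems faster. If an AI system designs an improvement to its basic learning algorithms, it will be able to learn new domains faster.7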

10. The argument from childhood

It takes a long time of interacting with the world and other people before human children start to be intelligent beings. It’s not clear how much faster an AI could develop.

A truism in project management is that nine women can’t have one baby in one month, but it’s dubious that this truism will apply to machine learning systems. AlphaGo seems like a key example here: it probably played about as many training games of Go as Lee Sedol did prior to their match, but was about two years old instead of 33 years old.

Sometimes, artificial systems have access to tools that people don’t. You probably can’t determine someone’s heart rate just by looking at their face and restricting your attention to particular color channels, but software with a webcam can. You probably can’t invert rank ten matrices in your head, but software with a bit of RAM can.

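Here we’re talking about something more like an old and experienced human. Consider an old doctor, for example: suppose they’ve seen 20 patients a day on 250 workdays a year for twenty years. That comes to 100,000 patient visits, which seems to be roughly the number of people who interact with the UK’s NHS in 3.6 hours. If we trained a machine learning doctor system on a year’s worth of NHS data, that would be the equivalent of fifty thousand years of medical experience, all gained over the course of a single year.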
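The arithmetic in this paragraph can be checked directly. The sketch below uses only the figures stated above (20 patients a day, 250 workdays a year, 20 years, 3.6 hours), plus one labeled assumption: that the NHS sees patients at a constant rate around the clock, all 365 days of the year.

```python
# Figures stated in the text.
PATIENTS_PER_DAY = 20
WORKDAYS_PER_YEAR = 250
CAREER_YEARS = 20
NHS_HOURS_PER_CAREER = 3.6  # hours for the NHS to see as many patients as one career

# Assumption: the NHS sees patients at a constant rate, 24 hours a day, every day.
HOURS_PER_YEAR = 365 * 24

career_visits = PATIENTS_PER_DAY * WORKDAYS_PER_YEAR * CAREER_YEARS
careers_per_nhs_year = HOURS_PER_YEAR / NHS_HOURS_PER_CAREER
experience_years = careers_per_nhs_year * CAREER_YEARS

print(career_visits)            # 100000
print(round(experience_years))  # 48667
```

A year of NHS data then holds roughly 2,433 doctors’ careers, or about 48,700 years of experience, which the paragraph rounds to fifty thousand.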

11. The argument from Gilligan’s Island

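Although we often think of intelligence as a property of individual minds, civilizational power comes from aggregated intelligence and experience. A genius working alone can’t accomplish much.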

This seems reversed. One of the properties of digital systems is that they can integrate with each other more quickly and seamlessly than humans can. Instead of thinking about a server farm AI as one colossal Einstein, think of it as an Einstein per blade, and so a single rack can contain multiple villages of Einsteins all working together. There’s no need to go through a laborious vetting process during hiring or a talent drought; expanding to fill more hardware is just copying code.

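If we take into account the fact that whenever one Einstein has an insight or learns a new skill, it can be rapidly transmitted to all the other nodes, the fact that these Einsteins can spin up fresh copies of themselves whenever they acquire new computing power, and the fact that the Einsteins can use all of humanity’s accumulated knowledge as a starting point, the server farm begins to sound rather formidable.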

(iii) Outside-view arguments

Next, the outside-view arguments, each of which should be prefaced with “If you take superintelligence seriously, …”:

12. The argument from grandiosity

…truly massive amounts of value are at stake.

It’s odd, by the Copernican principle, that our time looks as pivotal as it does. But while we should start off with a low prior on living at a pivotal time, we know that pivotal times have existed before,8 and we should eventually be able to believe that we’re living in one if we see enough evidence pointing in that direction.

13. The argument from megalomania

…truly massive amounts of power are at stake.

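In the long run, we should obviously be trying to use AI as a lever to improve the welfare of sentient beings, in whatever ways turn out to be technically feasible. As suggested by the “we aren’t going to solve all of ethics in one go” point, it would be very bad if the developers of advanced AI systems were overconfident or overambitious in what tasks they gave the first smarter-than-human AI systems. Starting with modest, non-open-ended goals is a good idea: not because signaling humility is important, but because modest goals are likely to be easier to get right (and less dangerous to get wrong).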

14. The argument from transhuman voodoo

…lots of other weird beliefs immediately follow.

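Beliefs often cluster together because they’re driven by similar underlying principles, but they remain distinct beliefs. It’s certainly possible to believe that AI alignment is very important and also that galactic expansion is mostly a pointless waste, or to believe that AI alignment is important while also believing that molecular nanotechnology is infeasible.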

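That said, whenever we see a technology where cognitive work is the main bottleneck, it’s reasonable to expect that the trajectory AI takes will have a major impact on that technology. If you were writing during the early days of the scientific method or at the dawn of the Industrial Revolution, an accurate model of the world would have required you to make at least some pretty extreme predictions. We can debate whether AI will be that big a deal, but if it is that big a deal, it would be odd for there to be no extreme futuristic implications.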

15. The argument from Religion 2.0

…you’ll be joining something like a religion.

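People are biased, and we should worry about ideas that might play on our biases; but we can’t use the existence of bias to ignore all object-level considerations and arrive at confident technological predictions. As the saying goes, just because you’re paranoid doesn’t mean they’re not out to get you. Medicine and religion both promise to heal the sick, but medicine can actually do it. To distinguish medical science from religion, you have to look at the arguments and the results.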

16. The argument from comic book ethics

…you’ll end up with a hero complex.

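We want a larger share of the research community working on these problems, so that the odds of success go up; what matters is that AI systems be developed in a responsible and circumspect way, not who gets the credit for developing those systems. If you start working on this problem now, you may well end up with a hero complex, but with luck, ten years from now it will feel like normal research (albeit on some particularly important problems).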

17. The argument from simulation fever

…you’ll come to believe that we’re probably living in a simulation rather than in base reality.

I personally find the simulation hypothesis implausible, because our universe looks both bounded and continuous (or nearly so) in space and time. It would seem more likely if our universe looked more like Minecraft. (It seems that the first kind of universe can’t easily simulate itself, whereas the second can, with a slowdown. The “RAM constraints” that get handwaved away by the simulation hypothesis are probably the core objection.) In either case, I don’t think this is a good argument for or against AI safety engineering as a field.9

18. The argument from data hunger

…you’ll want to capture everyone’s data.

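This seems unrelated to AI alignment. Yes, people building AI systems want data to train their systems on, and figuring out how to acquire data ethically rather than merely quickly should be a priority. But how would changing someone’s views about whether there will someday be smarter-than-human AI systems, and about how much work it will take to align those systems’ preferences with ours, shift their views about ethical data acquisition practices?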

19. The argument from string theory for programmers

…you’ll detach from reality into abstract thought.

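The fact that it’s difficult to test predictions about advanced AI systems is a huge problem. MIRI, at least, bases its research around trying to reduce the risk that we’ll end up building castles in the sky. This is part of the point of pursuing multiple angles of attack on the problem, encouraging more diversity in the field, focusing on problems that bear on a wide variety of possible systems, and prioritizing the formalization of informal and semi-formal system requirements.10 Quoting Eliezer Yudkowsky: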

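Crystallize ideas and policies so others can critique them. This is the other point of asking, “How would I do this using unlimited computing power?” If you sort of wave your hands and say, “Well, maybe we can apply this machine learning algorithm and that machine learning algorithm, and the result will be blah-blah-blah,” no one can convince you that you’re wrong. When you work with unbounded computing power, you can make the ideas simple enough that people can put them on whiteboards and go, “Wrong,” and you have no choice but to agree. It’s unpleasant, but it’s one of the ways that the field makes progress.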

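See “MIRI’s Approach” for more on the unbounded analysis approach. The Amodei/Olah AI safety agenda uses other heuristics, focusing on open problems that are easier to address in present-day and near-future systems but that still seem likely to be relevant to scaled-up systems.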

20. The argument from incentivizing crazy

…you’ll incentivize craziness in yourself and others.

Crazier ideas may make more headlines, but I don’t get the sense that they attract more research talent or funding. Nick Bostrom’s ideas are generally more reasoned-through than Ray Kurzweil’s, and the research community is correspondingly more interested in engaging with Bostrom’s arguments and pursuing relevant technical research. Whether or not you agree with Bostrom or think the field as a whole is doing useful work, this suggests that relatively important and thoughtful ideas are attracting more attention from research groups in this space.

21. The argument from AI cosplay

…you’ll be more likely to try to manipulate people and seize power.

I think we agree about the hazards of treating people as pawns, behaving unethically in pursuit of some greater good, etc. It’s not clear to me that people interested in AI alignment are atypical on this dimension relative to other programmers, engineers, mathematicians, etc. And as with other outside-view critiques, this shouldn’t represent much of an update about how important AI safety research is; you wouldn’t want to decide how many research dollars to commit to nuclear security and containment based primarily on how impressed you were with Leó Szilárd’s temperament.11

22. The argument from the alchemists

…you’ll be acting too soon, before we understand how intelligence really works.

While it seems unavoidable that the future holds surprises, of how and what and why, it seems like there are some things that we can identify as irrelevant. For example, the mystery of consciousness seems orthogonal to the mystery of problem-solving. It’s possible that the use of a problem-solving procedure on itself is basically what consciousness is, but it’s also possible that we can make an AI system that is able to flexibly achieve its goals without understanding what makes us conscious, and without having made it conscious in the process.

(iv) Productive discussions

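Now that I’ve covered these points, there’s some room to discuss how I think productive discussions work. To that end, I commend Cegłowski for doing a good job of laying out Bostrom’s full argument, though I think he misses some minor points. (For example, Bostrom doesn’t claim that all general intelligences will want to self-improve in order to better achieve their goals; he just claims that self-improvement is a useful subgoal for many goals, if it’s feasible.)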

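For some questions, we can rely heavily on experiment and observation to arrive at correct conclusions; for others, we need to rely much more on argument and theory. For example, when building sandcastles, the cost of testing hypotheses is low. But when designing airplanes, full-scale empirical testing is more expensive, in part because with a sufficiently bad design there’s a high chance the test pilot will die. Existential risks are at the extreme end of this spectrum, so we have to rely especially heavily on abstract argument (though of course we can still gain by testing testable predictions wherever possible).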

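A key property of useful verbal arguments, when we’re forced to rely on them, is that they’re more likely to work in worlds where the conclusion is true than in worlds where it is false. One can level the charge “you’re a clown” just as easily at a clown who says “2+2=4” as at a clown who says “2+2=5,” while the argument “what you’re saying implies 0=1” is only useful against the second clown. “0=1” is a useful counterargument to “2+2=5” because it points directly to a specific flaw (subtract 2 from both sides twice and you’ll get a contradiction), and because it is much less persuasive against truth than it is against falsehood.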
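Written out, the parenthetical step against “2+2=5” is:

```latex
\begin{aligned}
2 + 2 &= 5 \\
2 &= 3 && \text{(subtract 2 from both sides)} \\
0 &= 1 && \text{(subtract 2 from both sides again)}
\end{aligned}
```

Applying the same two steps to “2+2=4” yields only 0=0, which is why this argument has no force against the true claim.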

This makes me skeptical of outside-view arguments, because they’re too easily leveled against correct atypical views. Suppose that Norman Borlaug had predicted that he would save a billion lives, and this had been rejected on the outside view: after all, very few (if any) other people could credibly claim the same across all of history. What about that argument distinguishes between Borlaug and any other person? When experiments are cheap, it’s acceptable to predictably miss every “first,” but when experiments aren’t cheap, this becomes a fatal flaw.

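Insofar as our goal is to help each other have more accurate beliefs, I also think it’s important for us to work to identify our mutual “cruxes.” For any given disagreement, is there a proposition about the world that you think is true and I think is false, such that if you changed your mind about it you’d come around to my view, or vice versa?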

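By seeking out these cruxes, we can look more carefully and thoroughly for evidence and arguments that bear on the most important questions, rather than getting lost in side issues. In my case, I would be much more sympathetic to your argument if I stopped believing any of the following propositions (some of which you may already agree with):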

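- Agents’ values and capability levels are orthogonal, such that it’s possible to grow in power without growing in benevolence.

- Ceteris paribus, more computing power leads to more power.

- More specifically, more computing power can be useful for self-improvement, and this can lead to a positive feedback loop with doubling times closer to weeks than to years.

- There are strong economic incentives to create autonomous agents that (approximately) maximize their assigned objective functions.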

- Our capacities for empathy, moral reasoning, and restraint rely to some extent on specialized features of our brain that aren’t indispensable for general-purpose problem-solving, such that it would be a simpler engineering challenge to build a general problem solver without empathy than with empathy.12

Obviously this isn’t an exhaustive list, and we would need a longer back-and-forth to arrive at a list we both agreed was crucial.
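To give a sense of scale for the third proposition in the list above, the sketch below computes how much a quantity grows in one year under a constant doubling time. The two doubling times (two weeks and two years) are illustrative assumptions, not predictions, and a real feedback loop need not have a constant doubling time.

```python
WEEKS_PER_YEAR = 52


def growth_over_one_year(doubling_time_weeks: float) -> float:
    """Multiplicative growth after one year, assuming a constant doubling time."""
    return 2 ** (WEEKS_PER_YEAR / doubling_time_weeks)


for label, weeks in [("two weeks", 2), ("two years", 2 * WEEKS_PER_YEAR)]:
    print(f"doubling every {label}: x{growth_over_one_year(weeks):,.2f} per year")
# doubling every two weeks: x67,108,864.00 per year
# doubling every two years: x1.41 per year
```

The difference between “weeks” and “years” is thus not a modest one: 26 doublings in a year versus half of one.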

- For examples, see Stuart Russell (Berkeley), Francesca Rossi (IBM), Shane Legg (Google DeepMind), Eric Horvitz (Microsoft), Bart Selman (Cornell), Ilya Sutskever (OpenAI), Andrew Davison (Imperial College London), David McAllester (TTIC), Jürgen Schmidhuber (IDSIA), and Geoffrey Hinton (University of Toronto).↩

- See “What Is Intelligence?” for more on this idea, and on the terminology more generally.↩

- “No proposition Euclid wrote, / No formulae the text-books know, / Will turn the bullet from your coat, / Or ward the tulwar’s downward blow.”↩

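- Consider another pest: the mosquito. One could point to the continued existence of mosquitoes as evidence that superior intelligence is no match for the mosquito’s speed and flight, or for the number of eggs each female lays. Except that we have recently developed the ability to release genetically modified mosquitoes that could potentially drive a species to extinction. The method is to give male mosquitoes a gene that causes them to have only sons, who also carry the gene and also have only sons, until eventually the female population is too small to sustain the species.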

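Humanity’s dominance over other species isn’t perfect, but it does seem to be growing rapidly as we accumulate more knowledge and develop new technologies. This suggests that being superhumanly good at scientific research and engineering could increase dominance even faster, and to a higher absolute level of advantage. The history of human conflict provides plenty of data showing that even small differences in technological capability can give one group of humans a decisive advantage over another.↩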

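- For example, for almost any goal, you can predict that you’ll achieve more of it in worlds where you continue operating than in worlds where you stop operating. This naturally implies that you should try to prevent people from shutting you down, even if you weren’t programmed with any kind of self-preservation goal: staying online is instrumentally useful.↩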

- One of the challenges of AI alignment research is figuring out how to do this in a way that doesn’t involve changing your goals.↩

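- Hardware and software improvements can only get you so far: all the computation in the universe isn’t enough to crack a 2048-bit RSA key by brute force. But human ingenuity doesn’t come from an ability to factor large numbers quickly, and there’s plenty of reason to believe that the algorithms we’re running will scale.↩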

- Robin Hanson points to the evolution of animal minds, the evolution of humans, the invention of agriculture, and industrialism as four previous ones. The automation of intellectual labor seems like a strong contender for the next one.↩

- I also disagree with “But if you believe this, you believe in magic. Because if we’re in a simulation, we know nothing about the rules in the level above. We don’t even know if math works the same way — maybe in the simulating world 2+2=5, or maybe 2+2=?.” This undersells the universality of math. We might not know how the physical laws of any universe simulating us relate to our own, but mathematics isn’t pinned down by physical facts that could vary from universe to universe.↩

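- As opposed to sticking to purely informal speculation, or drilling down on a very specific formal framework before we’re confident that it captures the informal requirements.↩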

- Since Cegłowski endorsed Stanislaw Lem’s writing, I’ll close with a quote from Lem’s The Investigation, published in 1959:

“Once they begin to escalate their efforts, both sides are trapped in an arms race. There must be more and more improvements in weaponry, but after a certain point weapons reach their limit. What can be improved next? Brains. The brains that issue the commands. It isn’t possible to make the human brain perfect, so the only alternative is a transition to mechanization. The next stage will be a fully automated headquarters equipped with electronic strategy machines. And then a very interesting problem arises, actually two problems. McCatt called this to my attention. First, is there any limit on the development of these brains? Fundamentally they’re similar to computers that can play chess. A computer that anticipates an opponent’s strategy ten moves in advance will always defeat a computer that can think only eight or nine moves in advance. The more far-reaching a brain’s ability to think ahead, the bigger the brain must be. That’s one.”

…

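“Strategic considerations dictate the construction of bigger and bigger machines, and, whether we like it or not, this inevitably means an increase in the amount of information stored in the brains. That in turn means that the brain will steadily increase its control over all of society’s collective processes. The brain will decide where to locate the notorious button. Or whether to change the style of the infantry uniforms. Or whether to increase production of a certain kind of steel, demanding appropriations to carry out its purposes. Once you create a brain like this, you have to listen to it. If a parliament wastes time debating whether or not to grant the appropriations it demands, the other side may gain the lead, so after a while the abolition of parliamentary decisions becomes unavoidable. Human control over the brain’s decisions will decrease in proportion to the increase in its accumulated knowledge. Am I making myself clear? There will be two growing brains, one on each side of the ocean. What do you think a brain like this will demand first when it’s ready to take the next step in the perceptual race?”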

“An increase in its capabilities.”

…

“No, first it demands its own expansion; that is, the brain gets bigger! Increased capability comes next.”

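“In other words, you predict that the world will end up as a chessboard, and all of us will be pawns manipulated in an eternal game by two mechanical players.” This doesn’t mean Lem would endorse this position, but it does show that he was thinking about these kinds of issues.↩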

- Mirror neurons, for example, may be important for humans’ motivational systems and social reasoning without being essential for the social reasoning of arbitrary high-capability AI systems.↩
