MIRI’s mission is “to ensure that the creation of smarter-than-human artificial intelligence has a positive impact.” How can we ensure any such thing? It’s a daunting task, especially given that we don’t have any smarter-than-human machines to work with at the moment. In the previous post I discussed four背景要求that motivate our mission; in this post I will describe our approach to addressing the challenge.

This challenge is sizeable, and we can only tackle a portion of the problem. For this reason, we specialize. Our two biggest specializing assumptions are as follows:

我们专注于最初创建的比人类智能更聪明的场景从头software systems (as opposed to, say, brain emulations).

这部分是因为似乎很难在某人逆转工程之前,将大脑使用算法并在软件系统中使用它们,这似乎很困难,部分原因是我们期望任何高度可靠的AI系统都需要亚博体育苹果app官方下载至少要为了安全性和透明度而从头开始构建一些组件。然而,早期的超级智能系统将不会是人为设计的软件是非常合理的,而且我强烈认可研究侧重于降低其他途径风险的研究计划。亚博体育官网亚博体育苹果app官方下载

We specialize almost entirely in technical research.

We select our researchers for their proficiency in mathematics and computer science, rather than forecasting expertise or political acumen. I stress that this is only one part of the puzzle: figuring out how to build the right system is useless if the right system does not in fact get built, and ensuring AI has a positive impact is not simply a technical problem. It is also a global coordination problem, in the face of short-term incentives to cut corners. Addressing these non-technical challenges is an important task that we do not focus on.

简而言之,Miri进行技术研究,以确保亚博体育官网从头AI软件系统将产生积极影亚博体育苹果app官方下载响。我们没有进一步区分不同类型的AI软件系统,也没有对我们期望AI系统获得超智能的速度的准确主张。亚博体育苹果app官方下载相反,我们当前的方法是使用以下问题选择开放问题:

即使挑战要简单得多,我们仍然无法解决什么?

例如,即使我们有很多计算能力和非常简单的目标,我们也可以研究无法解决的AI对齐问题。

We then filter on problems that are (1) tractable, in the sense that we can do productive mathematical research on them today; (2) uncrowded, in the sense that the problems are not likely to be addressed during normal capabilities research; and (3) critical, in the sense that they could not be safely delegated to a machine unless we had first solved them ourselves. (Since the goal is to design intelligent machines, there are many technical problems that we can expect to eventually delegate to those machines. But it is difficult to trust an unreliable reasoner with the task of designing reliable reasoning!)

这三个过滤器通常没有争议。这里有争议的说法是上述问题:“即使挑战更简单,我们将无法解决什么?”- 是开放技术问题的生成器,解决方案将在将来帮助我们设计更安全,更可靠的AI软件,而不管其建筑如何。本文的其余部分致力于证明这一主张,并描述其背后的原因。

1. Creating a powerful AI system without understanding why it works is dangerous.

机器超级智能的大部分风险来自人们建立的可能性systems that they do not fully understand。

Currently, this is commonplace in practice: many modern AI researchers are pushing the capabilities of deep neural networks in the absence of theoretical foundations that describe why they’re working so well or a solid idea of what goes on beneath the hood. These shortcomings are being addressed over time: many AI researchers are currently working on transparency tools for neural networks, and many more are working to put theoretical foundations beneath deep learning systems. In the interim, using trial and error to push the capabilities of modern AI systems has led to many useful applications.

什么时候designing a superintelligent agent, by contrast, we will want an unusually high level of confidence in its safety前we begin online testing: trial and error alone won’t cut it, in that domain.

为了说明,请考虑一项研究Bird and Layzell在2002年。They used some simple genetic programming to design an oscillating circuit on a circuit board. One solution that the genetic algorithm found entirely avoided using the built-in capacitors (an essential piece of hardware in human-designed oscillators). Instead, it repurposed the circuit tracks on the motherboard as a radio receiver, and amplified an oscillating signal from a nearby computer.

This demonstrates that powerful search processes can often reach their goals via unanticipated paths. If Bird and Layzell were hoping to use their genetic algorithm to find code for a robust oscillating circuit — one that could be used on many different circuit boards regardless of whether there were other computers present — then they would have been sorely disappointed. Yet if they had tested their algorithms extensively on a virtual circuit board that captured all the features of the circuit board that theythoughtwere relevant (but not features such as “circuit tracks can carry radio signals”), then they would not have noticed the potential for failure during testing. If this is a problem when handling simple genetic search algorithms, then it will be a much larger problem when handling smarter-than-human search processes.

什么时候it comes to designing smarter-than-human machine intelligence, extensive testing is essential, but not sufficient: in order to be confident that the system will not find unanticipated bad solutions when running in the real world, it is important to have a solid understanding of how the search process works and why it is expected to generate only satisfactory solutions此外进行经验测试数据。

Miri的研究亚博体育官网计划旨在确保我们拥有在部署之前检查和分析比人类更明智的搜索过程所需的工具。

By analogy, neural net researchers could probably have gotten quite far without having any formal understanding of probability theory. Without probability theory, however, they would lack the tools needed to understand modern AI algorithms: they wouldn’t know about Bayes nets, they wouldn’t know how to formulate assumptions like “independent and identically distributed,” and they wouldn’t quite know the conditions under which Markov Decision Processes work and fail. They wouldn’t be able to talk about priors, or check for places where the priors are zero (and therefore identify things that their systems cannot learn). They wouldn’t be able to talk about bounds on errors and prove nice theorems about algorithms that find an optimal policy eventually.

他们仍然可能会变得非常远(and developed half-formed ad-hoc replacements for many of these ideas), but without probability theory, I expect they would have a harder time designing highly reliable AI algorithms. Researchers at MIRI tend to believe that similarly large chunks of AI theory are still missing, andthoseare the tools that our research program aims to develop.

2.即使是通过蛮力,我们也无法创建有益的AI系统。亚博体育苹果app官方下载

Imagine you have a Jupiter-sized computer and a very simple goal: Make the universe contain as much diamond as possible. The computer has access to the internet and a number of robotic factories and laboratories, and by “diamond” we mean carbon atoms covalently bound to four other carbon atoms. (Pretend we don’t care how it makes the diamond, or what it has to take apart in order to get the carbon; the goal is to study a simplified problem.) Let’s say that the Jupiter-sized computer is running python. How would you program it to produce lots and lots of diamond?

就目前而言,我们尚不知道如何对计算机进行编程以实现这样的目标。

We couldn’t yet create an artificial general intelligence通过蛮力,这表明问题的某些部分我们尚未理解。

我们有许多AI任务可以蛮力。例如,我们可以编写一个程序真的,真的很好在解决计算机视觉问题:如果我们有一个indestructible box that produced pictures and questions about them, waited for answers, scored the answers for accuracy, and then repeated the process, then we know how to write the program that interacts with that box and gets very good at answering the questions. (The program would essentially be a bounded version ofAIXI。)

By a similar method, if we had an indestructible box that produced a conversation and questions about it, waited for natural-language answers to the questions, and scored them for accuracy, then again, we could write a program that would get very good at answering well. In this sense, we know how to solve computer vision and natural language processing by brute force. (Of course, natural-language processing is nowhere near “solved” in a practical sense — there is still loads of work to be done. A brute force solution doesn’t get you very far in the real world. The point is that, for many AI alignment problems, we haven’t even made it to the “we could brute force it” level yet.)

为什么在上面的示例中需要坚不可摧的盒子?因为现代蛮力解决方案的工作方式是将每台图灵机(达到一定的复杂性极限)视为关于盒子的假设,看到哪些与观察一致,然后执行导致高分出现的动作盒子(如其余假设所预测的,以简单性加权)。

每个假设都是不透明的图灵机,并且算法永远不会窥视内部:它只是要求每个假设预测盒子执行某个动作链,盒子将输出什么得分。这意味着,如果算法(通过详尽的搜索)找到一个计划最大化the score coming out of the box, and the box is destructible, then the opaque action chain that maximizes score is very likely to be the one that pops the box open and alters it so that it always outputs the highest score. But given an indestructible box, we know how to brute force the answers.

In fact, roughly speaking, we understand how to solveanyreinforcement learning problem via brute force. This is a far cry from knowing how to几乎解决强化学习问题!但这确实说明了两种类型的问题之间的种类差异。我们可以(不完美和启发性地)将AI问题划分如下:

AI中有两种类型的开放问题。人们正在弄清楚如何在我们知道如何在原则上解决的实践问题解决。另一个正在弄清楚如何解决我们甚至不知道如何违反武力的原则问题。

MIRI focuses on problems of the second class.1

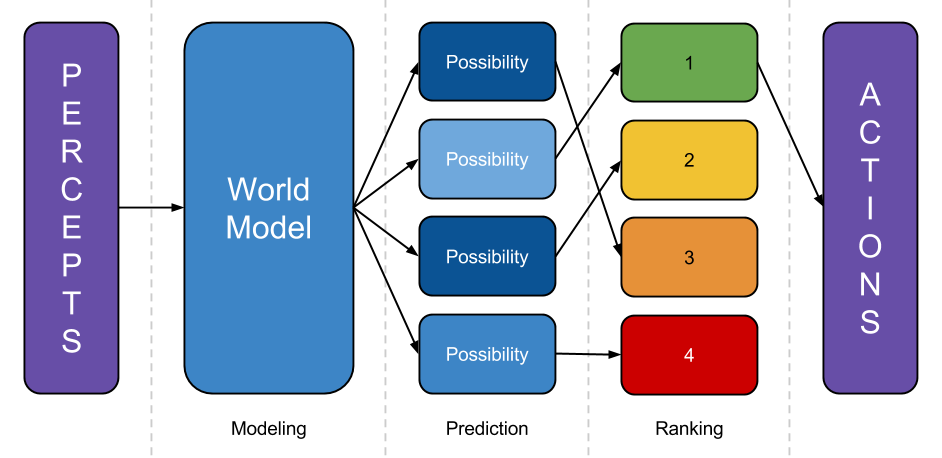

What is hard about brute-forcing a diamond-producing agent? To illustrate, I’ll give a wildly simplified sketch of what an AI program needs to do in order to act productively within a complex environment:

- Model the world: Take percepts, and use them to refine some internal representation of the world the system is embedded in.

- Predict the world: Take that world-model, and predict what would happen if the system executed various different plans.

- 排名结果:将这些可能性评为预测的良好,然后执行导致高分结果的计划。2

Consider the modeling step. As discussed above, we know how to write an algorithm that finds good world-models by brute force: it looks at lots and lots of Turing machines, weighted by simplicity, treats them like they are responsible for its observations, and throws out the ones that are inconsistent with observation thus far. But (aside from being wildly impractical) this yields onlyopaque假设:系统可以询问每个图灵机输出亚博体育苹果app官方下载的“感觉钻头”,但它无法窥视内部并检查内部表示的对象。

If there is some well-defined “score” that gets spit out by the opaque Turing machine (as in a reinforcement learning problem), then it doesn’t matter that each hypothesis is a black box; the brute-force algorithm can simply run the black box on lots of inputs and see which results in the highest score. But if the problem is to build lots of diamond in the real world, then the agent must work as follows:

- 建立一个世界的模型 - 代表碳原子和共价键的模型。

- Predict how the world would change contingent on different actions the system could execute.

- Lookinsideeach prediction and see which predicted future has the most diamond. Execute the action that leads to more diamond.

换句话说,构建以可靠影响的AIthings in the worldneeds to have world-models that are amenable to inspection. The system needs to be able to pop open the world model, identify the representations of carbon atoms and covalent bonds, and estimate how much diamond is in the real world.3

我们还没有一个清晰的照片如何构建“inspectable” world-models — not even by brute force. Imagine trying to write the part of the diamond-making program that builds a world-model: this function needs to take percepts as input and build a data structure that represents the universe, in a way that allows the system to inspect universe-descriptions and estimate the amount of diamond in a possible future. Where in the data structure are the carbon atoms? How does the data structure allow the concept of a “covalent bond” to be formed and labeled, in such a way that it remains accurate even as the world-model stops representing diamond as made of atoms and starts representing them as made of protons, neutrons, and electrons instead?

我们需要一个国际算法构建multi-level representations of the world and allows the system to pursue the same goals (make diamond) even as its model changes drastically (because it discovers quantum mechanics). This is in stark contrast to the existing brute-force solutions that use opaque Turing machines as hypotheses.4

什么时候humans关于宇宙的原因,我们似乎从中间做出某种推理:我们首先建模人和岩石之类的东西,并最终意识到这些是由原子制成的,这些原子是由质子,中子和电子制成的,这些原子是量子场中的扰动。我们绝对不确定模型中的最低级别是现实中最低的水平。当我们继续思考世界时构造new hypotheses to explain oddities in our models. What sort of data structure are we using, there? How do we add levels to a world model given new insights? This is the sort of reasoning algorithm that we do not yet understand how to formalize.5

That’s step一在蛮力一个sim AI,可靠地奉行ple goal. We also don’t know how to brute-force steps two or three yet. By simplifying the problem — talking about diamonds, for example, rather than more realistic goals that raise a host of other difficulties — we’re able to factor out the parts of the problems that we don’t understand how to solve yet, even in principle. Our技术议程描述了使用此方法确定的许多开放问题。

3. Figuring out how to solve a problem in principle yields many benefits.

1836年,埃德加·艾伦·坡(Edgar Allen Poe)写了wonderful essayon Maelzel’s Mechanical Turk, a machine that was purported to be able to play chess. In the essay, Poe argues that the Mechanical Turk must be a hoax: he begins by arguing that machines cannot play chess, and proceeds to explain (using his knowledge of stagecraft) how a person could be hidden within the machine. Poe’s essay is remarkably sophisticated, and a fun read: he makes reference to the “calculating machine of Mr. Babbage” and argues that it cannot possibly be made to play chess, because in a calculating machine, each steps follows from the previous step by necessity, whereas “no one move in chess necessarily follows upon any one other”.

机械土耳其人确实被证明是一个骗局。然而,在1950年,克劳德·香农(Claude Shannon解释如何编程计算机以下棋。

香农的算法绝不是对话的结束。从该纸到Deep Blue花了46年的时间,这是一个击败人类世界冠军的实用国际象棋计划。但是,如果您配备了Poe的知识状态,并且还不确定是否是可能的for a computer to play chess — because you did not yet understand algorithms for constructing game trees and doing backtracking search — then you would probably not be ready to start writing practical chess programs.

Similarly, if you lacked the tools of probability theory — an understanding of Bayesian inference and the limitations that stem from bad priors — then you probably wouldn’t be ready to program an AI system that needed to manage uncertainty in high-stakes situations.

If you are trying to write a program and you can’t yet say how you would write it given an arbitrarily large computer, then you probably aren’t yet ready to design a practical approximation of the brute-force solution yet. Practical chess programs can’t generate a full search tree, and so rely heavily on heuristics and approximations; but if you can’t brute-force the answer yet givenarbitraryamounts of computing power, then it’s likely that you’re missing some important conceptual tools.

Marcus Hutter (inventor of AIXI) and Shane Legg (inventor of theUniversal Measure of Intelligence)似乎认可这种方法。他们的工作可以解释为如何找到如何找到任何强化学习问题的蛮力解决方案,实际上,上述如何做到这一点的描述是由于legg和hutter所致。

实际上,Google DeepMind的创始人参考Shane论文的完成是四个关键指标之一,即开始在AGI上工作的时间已经成熟:一个理论框架描述了如何解决强化学习问题原则上demonstrated that modern understanding of the problem had matured to the point where it was time for the practical work to begin.

Before we gain a formal understanding of the problem, we can’t be quite sure what the problem是。We may fail to notice holes in our reasoning; we may fail to bring the appropriate tools to bear; we may not be able to tell when we’re making progress. After we gain a formal understanding of the problem in principle, we’ll be in a better position to make practical progress.

对问题建立正式理解的目的不是runthe resulting algorithms. Deep Blue did not work by computing a full game tree, and DeepMind is not trying to implement AIXI. Rather, the point is to identify and develop the basic concepts and methods that are useful for solving the problem (such as game trees and backtracking search algorithms, in the case of chess).

The development of probability theory has been quite useful to the field of AI — not because anyone goes out and attempts to build a perfect Bayesian reasoner, but because probability theory is the unifying theory for reasoning under uncertainty. This makes the tools of probability theory useful for AI designs that vary in any number of implementation details: any time you build an algorithm that attempts to manage uncertainty, a solid understanding of probabilistic inference is helpful when reasoning about the domain in which the system will succeed and the conditions under which it could fail.

这就是为什么我们认为我们可以确定我们今天可以解决的开放问题,无论未来一般智能机器的设计如何(或到达那里需要多长时间),这些问题都将可靠地有用。通过寻找问题,即使问题要容易得多,我们也希望确定缺少核心AGI算法的地方。通过对如何在原则上解决这些问题的正式理解,我们旨在确保在解决实践中的这些问题时,程序员拥有开发他们深入了解的解决方案所需的知识,以及他们需要的工具以及他们需要的工具确保其构建的系统非常可靠。亚博体育苹果app官方下载

4. This is an approach researchers have used successfully in the past.

Our main open-problem generator — “what would we be unable to solve even if the problem were easier?” — is actually a fairly common one used across mathematics and computer science. It’s more easy to recognize if we rephrase it slightly: “can we reduce the problem of building a beneficial AI to some other, simpler problem?”

例如,您无需询问您是否可以编程木星大小的计算机生产钻石,而是可以将其重新为一个问题,即我们是否可以将钻石最大化问题减少到已知的推理和计划程序。(当前的答案是“还没有。”)

This is a fairly standard practice in computer science, where reducing one problem to another is a计算理论的关键特征。在数学中,通常可以通过将一个问题减少到另一个问题来实现证据(例如,请参见著名的案例Fermat的最后定理)。这有助于关注问题的各个部分不是解决并确定缺乏基本理解的主题。

As it happens, humans have a pretty good track record when it comes to working on problems such as these. Humanity hasn’t been very good at predicting long-term technological trends, but we have reasonable success developing theoretical foundations for technical problems decades in advance, when we put sufficient effort into it. Alan Turing and Alonzo Church succeeded in developing a robust theory of computation that proved quite useful once computers were developed, in large part by figuring out how to solve (in principle) problems which they did not yet know how to solve with machines. Andrey Kolmogorov, similarly, set out to formalize intuitive but not-yet-well-understood methods for managing uncertainty; and he succeeded. And Claude Shannon and his contemporaries succeeded at this endeavor in the case of chess.

概率理论的发展与我们的案件特别好:这是一个领域,数百年来,哲学家和数学家试图反复将自己的“不确定性”正式观念形式化为悖论和矛盾。当时的概率理论非常缺乏正式的基础,被称为“不幸理论”。然而,科尔莫戈罗夫(Kolmogorov)和其他人为正式化理论的一致努力取得了成功,他的努力启发了开发了许多有用的工具,用于设计系统,这些系统可靠地不确定性地理解。亚博体育苹果app官方下载

Many people who set out to put foundations under a new field of study (that was intuitively understood on some level but not yet formalized) have succeeded, and their successes have been practically significant. We aim to do something similar for a number of open problems pertaining to the design of highly reliable reasoners.

The questions MIRI focuses on, such as “how would one ideally handle logical uncertainty?” or “how would one ideally build multi-level world models of a complex environment?”, exist at a level of generality comparable to Kolmogorov’s “how would one ideally handle empirical uncertainty?” or Hutter’s “how would one ideally maximize reward in an arbitrarily complex environment?” The historical track record suggests that these are the kinds of problems that it is possible to both (a) see coming in advance, and (b) work on without access to a concrete practical implementation of a general intelligence.

By identifying parts of the problem that we would still be unable to solve even if the problem was easier, we hope to hone in on parts of the problem where core algorithms and insights are missing: algorithms and insights that will be useful no matter what architecture early intelligent machines take on, and no matter how long it takes to create smarter-than-human machine intelligence.

At present, there are only three people on our research team, and this limits the number of problems that we can tackle ourselves. But our approach is one that we can scale up dramatically: it has generated a very large number of open problems, and we have no shortage of questions to study.6

This is an approach that has often worked well in the past for humans trying to understand how to approach a new field of study, and I am confident that this approach is pointing us towards some of the core hurdles in this young field of AI alignment.

- Most of the AI field focuses on problems of the first class. Deep learning, for example, is a very powerful and exciting tool for solving problems that we know how to brute-force, but which were, up until a few years ago, wildly intractable. Class 1 problems tend to be important problems for building more capable AI systems, but lower-priority for ensuring that highly capable systems are aligned with our interests.↩

- In reality, of course, there aren’t clean separations between these steps. The “prediction” step must be more of a ranking-dependent planning step, to avoid wasting computation predicting outcomes that will obviously be poorly-ranked. The modeling step depends on the prediction step, because which parts of the world-model are refined depends on what the world-model is going to be used for. A realistic agent would need to make use of meta-planning to figure out how to allocate resources between these activities, etc. This diagram is a fine first approximation, though: if a system doesn’t do something like modeling the world, predicting outcomes, and ranking them somewhere along the way, then it will have a hard time steering the future.↩

- 在加强学习问题中,通过一个特殊的“奖励渠道”避免了此问题,该问题旨在间接地站在主管想要的东西上。(例如,主管每次学习者都会采取似乎对主管制作钻石有用的行动时,都可以按下奖励按钮。建模并编程系统以执行预测的动作会导致高奖励。亚博体育苹果app官方下载这比设计世界模型的方式要容易得多,以使系统可以可靠地识别其内部碳原子和共价键的表示(尤其是如果世界是按照牛顿力学建模的,下一天是在世界上建模的亚博体育苹果app官方下载),但是接下来是量子力学的),但是doesn’t provide a framework for agents that must autonomously learn how to achieve some goal. Correct behavior in highly intelligent systems will not always be reducible to maximizing a reward signal controlled by a significantly less intelligent system (e.g., a human supervisor).↩

- 根据模型优化的搜索算法的想法facts about the worldrather than justexpected perceptsmay sound basic, but we haven’t found any deep insights (or clever hacks) that allow us to formalize this idea (e.g., as a brute-force algorithm). If we could formalize it, we would likely get a better understanding of the kind of abstract modeling of objects and facts that is required for自指,逻辑上不确定的,程序员不可忽视的推理。↩

- 我们还怀疑,用于建立多层次世界模型的蛮力算法将比Solomonoff感应归纳更适合“缩放”,因此将有一些深入了解如何在实践环境中建立多层世界模型。↩

- 例如,您可以询问我们是否可以减少对人类行为做出可靠预测的问题的问题的问题,而不是询问赋予大量计算能力的问题,而不是问赋予大量计算能力的问题:一种方法:一种方法由他人提倡。↩

Did you like this post?You may enjoy our otheryabo app 帖子,包括: