James Miller on Unusual Incentives Facing AGI Companies

James D. Miller is an associate professor of economics at Smith College. He is the author of Singularity Rising, Game Theory at Work, and a principles of microeconomics textbook, along with several academic articles.

He has a PhD in economics from the University of Chicago and a J.D. from Stanford Law School, where he was on Law Review. He is a member of cryonics provider Alcor and a research advisor to MIRI. He is currently co-writing a book on better decision making with the Center for Applied Rationality and will probably be an editor on the next edition of the Singularity Hypotheses book. He is a committed bio-hacker currently practicing or consuming a paleo diet, neurofeedback, cold thermogenesis, intermittent fasting, brain fitness video games, smart drugs, bulletproof coffee, and rationality training.

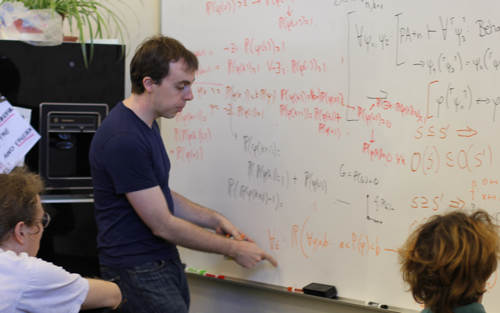

Luke Muehlhauser: Your book chapter in Singularity Hypotheses describes some unusual economic incentives facing a future business that is working to create AGI. To explain your point, you make the simplifying assumption that “a firm’s attempt to build an AGI will result in one of three possible outcomes”:

- Unsuccessful: The firm fails to create AGI, losing value for its owners and investors.

- Riches: The firm creates AGI, bringing enormous wealth to its owners and investors.

- Foom: The firm creates AGI, but this event quickly destroys the value of money, e.g. via an intelligence explosion that eliminates scarcity, creates a weird world without money, or exterminates humanity.

How does this setup allow us to see the unusual incentives facing a future business that is working to create AGI?

James Miller: A huge asteroid might hit the Earth, and if it does, it will destroy mankind. You should be willing to bet everything you have that the asteroid will miss our planet, because either you win your bet or Armageddon renders the wager irrelevant. Similarly, if I’m going to start a company that will either make investors extremely rich or create a Foom that destroys the value of money, you should be willing to invest a lot in my company’s success, because either the investment will pay off or you would have done no better making any other kind of investment.
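As a stylized illustration (not taken from the chapter), the asteroid wager can be modeled as a small set of world states, each tagged with whether money still has value in it. The `State` tuple, the state names, and the helper functions below are hypothetical. The sketch takes the worst payoff of the bet over only those states where winnings can still be spent, and checks that the result does not depend on the chance of impact.

```python
from __future__ import annotations

from typing import NamedTuple


class State(NamedTuple):
    probability: float   # hypothetical probability of this state
    money_matters: bool  # can winnings still be spent in this state?
    payoff: float        # payoff per unit staked on "the asteroid misses"


def asteroid_states(p_hit: float) -> dict[str, State]:
    """Two hypothetical states of the world for the asteroid wager."""
    return {
        "misses": State(1.0 - p_hit, True, 1.0),  # the bet wins the stake
        "hits": State(p_hit, False, -1.0),        # the bet loses, but no one is left to care
    }


def worst_payoff_that_counts(states: dict[str, State]) -> float:
    """Minimum payoff across the states in which money still has value."""
    return min(s.payoff for s in states.values() if s.money_matters)


# The worst payoff that matters is the same however likely the impact is.
for p_hit in (0.01, 0.5, 0.99):
    print(p_hit, worst_payoff_that_counts(asteroid_states(p_hit)))
```

Each printed line reports a worst-case payoff of 1.0: in this toy model the wager never loses in any state where the loss could be felt, which is why the probability of impact drops out of the decision.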

Pretend I want to create a controllable AGI, and if successful I will earn great Riches for my investors. At first I intend to follow a research and development path in which, if I fail to achieve Riches, my company will be Unsuccessful and have no significant impact on the world. Unfortunately, I can’t convince potential investors that the probability of my achieving Riches is high enough to make my company worth investing in: the investors assign too large a likelihood that other potential investments would outperform my firm’s stock. But then I develop an evil alternative research and development plan under which I have exactly the same probability of achieving Riches as before, but now, if I fail to create a controllable AGI, an unfriendly Foom will destroy humanity. Now I can truthfully tell potential investors that it’s highly unlikely any other company’s stock will outperform mine.
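Under the three-outcome simplification, the switch between the two plans can be made concrete with a toy calculation. The function name, the 10% chance of Riches, and the assumptions that Riches beats every alternative investment while Unsuccessful loses to all of them are illustrative inventions, not figures from the chapter. Foom outcomes drop out of the comparison because they leave every investment equally worthless.

```python
def prob_beats_alternatives(p_riches: float, p_unsuccessful: float, p_foom: float) -> float:
    """Probability the firm's stock outperforms other investments,
    conditional on money still having value.

    Hypothetical simplifications: Riches beats every alternative,
    Unsuccessful loses to every alternative, and Foom makes all
    investments equally worthless, so Foom drops out of the comparison.
    """
    if abs(p_riches + p_unsuccessful + p_foom - 1.0) > 1e-9:
        raise ValueError("outcome probabilities must sum to 1")
    p_money_matters = p_riches + p_unsuccessful
    if p_money_matters == 0:
        raise ValueError("money never retains value; the comparison is undefined")
    return p_riches / p_money_matters


# Both plans keep the same (hypothetical) 10% chance of Riches.
safe_plan = prob_beats_alternatives(p_riches=0.1, p_unsuccessful=0.9, p_foom=0.0)
evil_plan = prob_beats_alternatives(p_riches=0.1, p_unsuccessful=0.0, p_foom=0.9)
print(f"safe plan: {safe_plan:.2f}, evil plan: {evil_plan:.2f}")
```

This prints `safe plan: 0.10, evil plan: 1.00`. Moving probability from Unsuccessful to Foom leaves the chance of Riches untouched, yet, conditional on money still mattering, the stock now beats every alternative in this toy model, which is the perverse incentive Miller describes.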
