Because the world is big, the agent as it is may be inadequate to accomplish its goals, including in its ability to think.

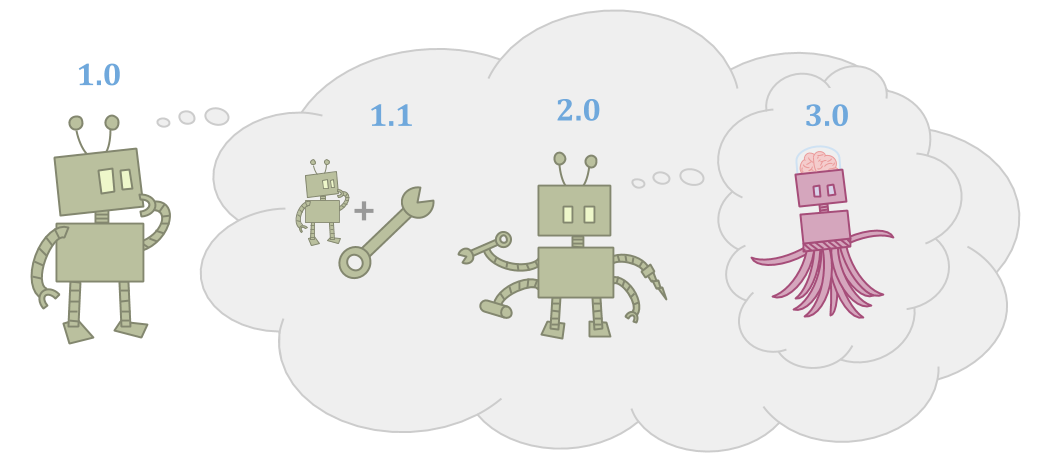

Because the agent is made of parts, it can improve itself and become more capable.

Improvements can take many forms: The agent can make tools, the agent can make successor agents, or the agent can just learn and grow over time. However, the successors or tools need to be more capable for this to be worthwhile.

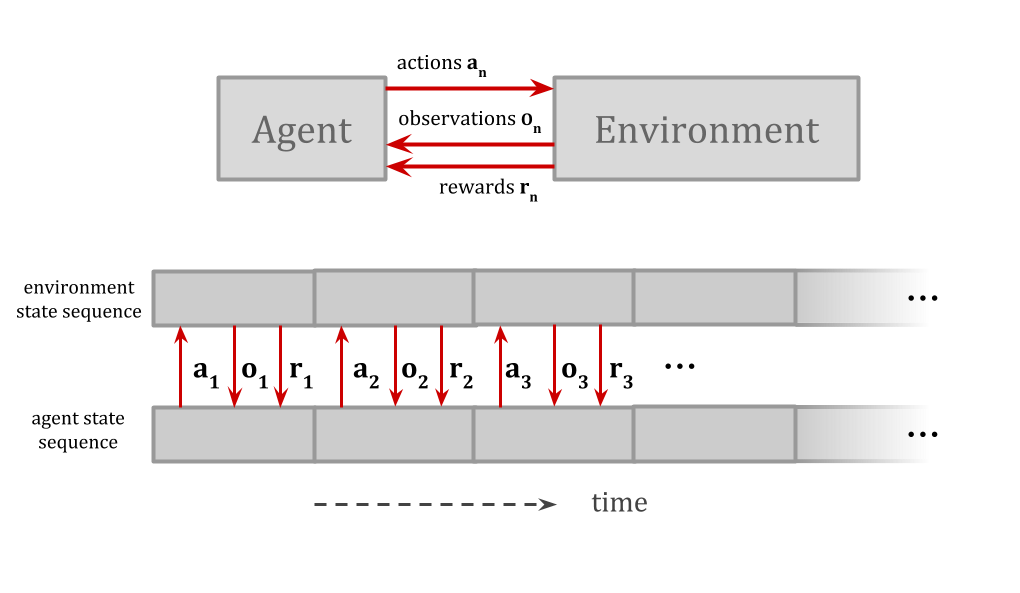

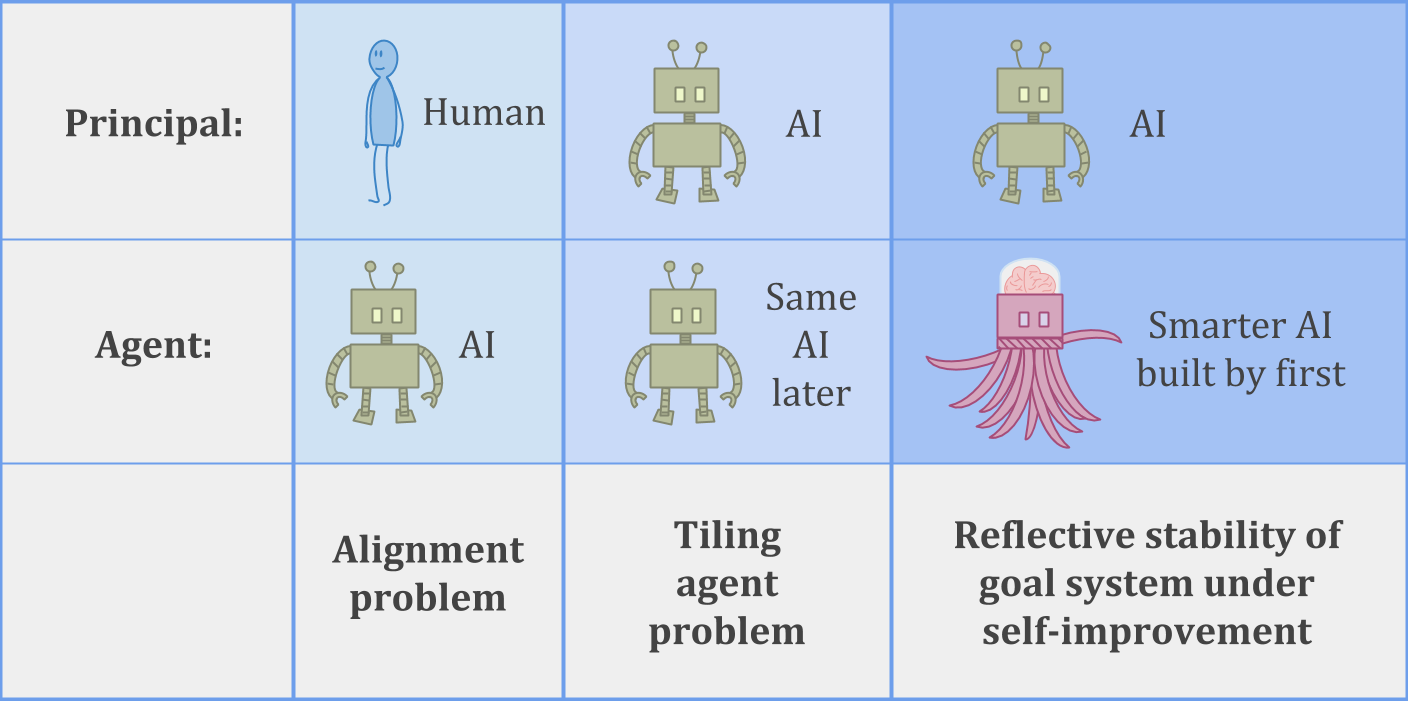

This gives rise to a special type of principal/agent problem:

You have an initial agent, and a successor agent. The initial agent gets to decide exactly what the successor agent looks like. The successor agent, however, is much more intelligent and powerful than the initial agent. We want to know how to have the successor agent robustly optimize the initial agent’s goals.

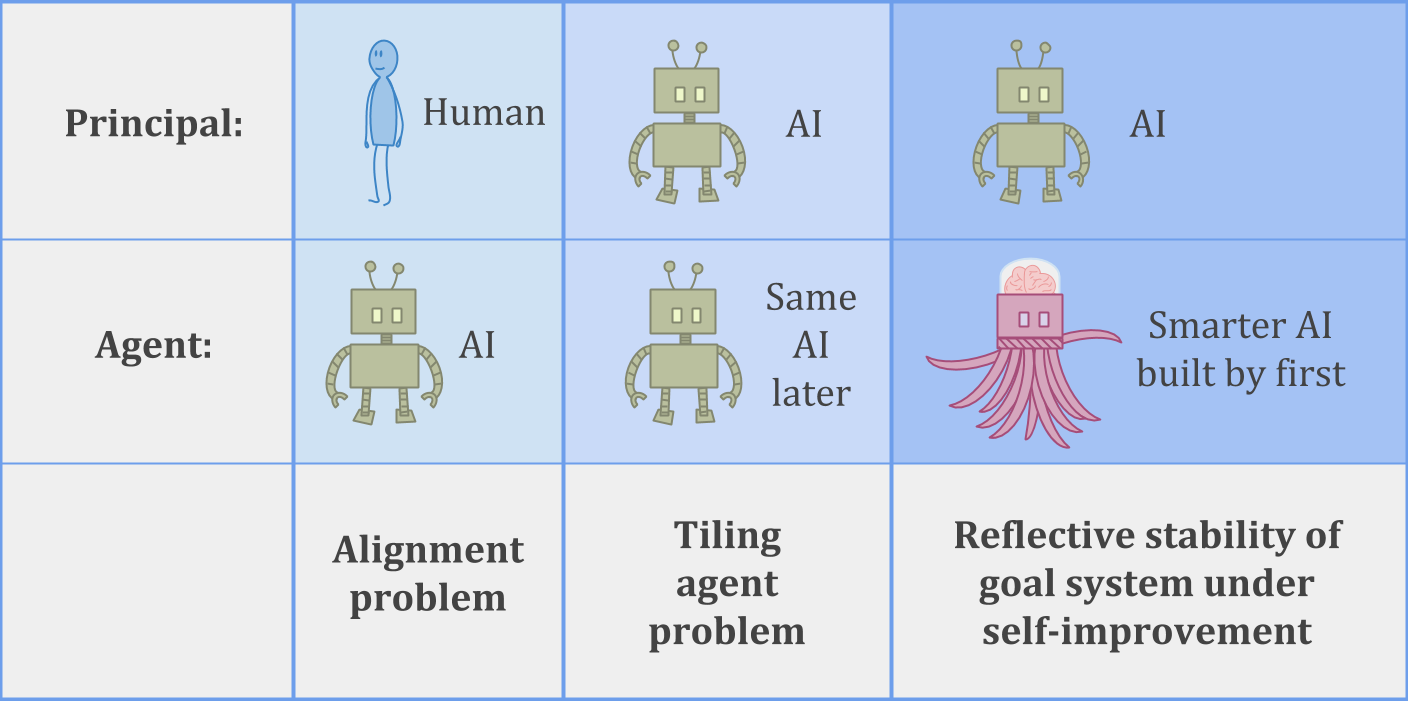

Here are three examples of forms this principal/agent problem can take:

In the AI alignment problem, a human is trying to build an AI system which can be trusted to help with the human’s goals.

In the tiling agents problem, an agent is trying to make sure it can trust its future selves to help with its own goals.

Or we can consider a harder version of the tiling problem, stable self-improvement, where an AI system has to build a successor which is more intelligent than itself, while still being trustworthy and helpful.

For a human analogy which involves no AI, you can think about the problem of succession in royalty, or more generally the problem of setting up organizations to achieve desired goals without losing sight of their purpose over time.

The difficulty seems to be twofold:

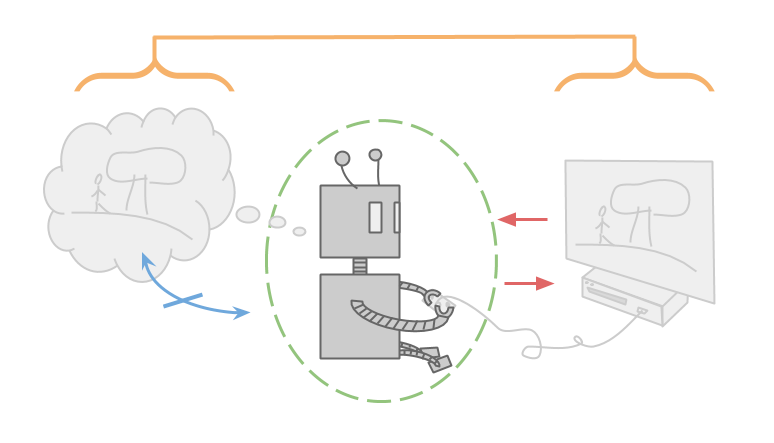

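First, a human or AI agent may not fully understand itself and its own goals. If an agent can’t write out what it wants in exact detail, it is hard for it to guarantee that its successor will robustly help with the goal.

Second, the idea behind delegating work is that you don’t have to do all the work yourself. You want the successor to be able to act with some degree of autonomy, including learning new things that you don’t know and wielding new skills and capabilities.

In the limit, a really good formal account of robust delegation should be able to handle arbitrarily capable successors without throwing up any errors, like a human or AI building an unbelievably smart AI, or like an agent that just keeps learning and growing for so many years that it ends up much smarter than its past self.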

The problem is not (just) that the successor agent might be malicious. The problem is that we don’t even know what it means not to be.

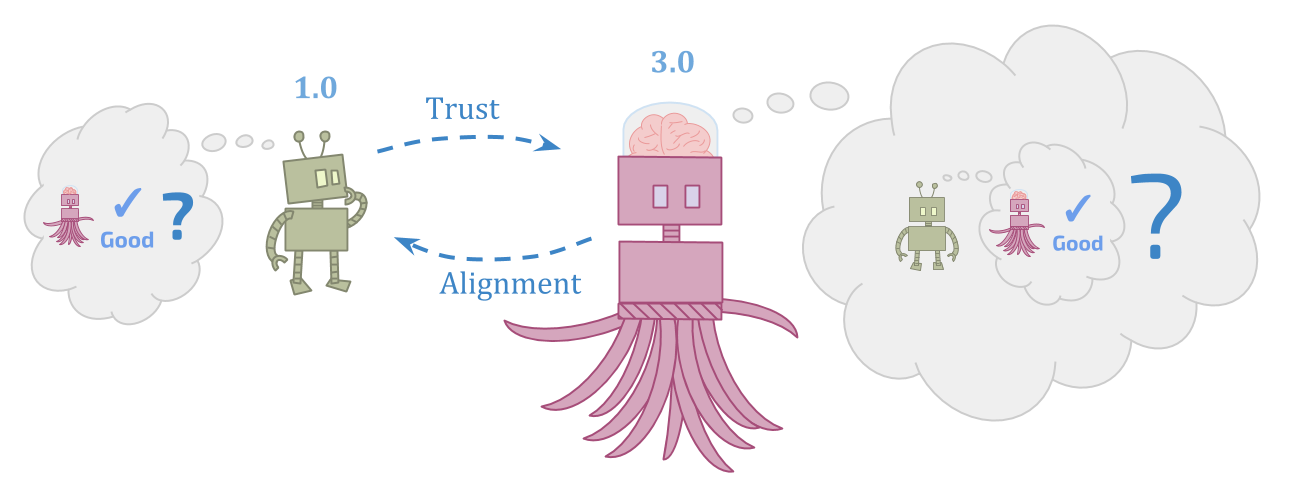

This problem seems hard from both points of view.

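The initial agent needs to figure out how reliable and trustworthy something more powerful than itself is, which seems very hard. But the successor agent has to figure out what to do in situations that the initial agent can’t even understand, and try to respect the goals of something that the successor can see is inconsistent, which also seems very hard.

At first, this may look like a less fundamental problem than “make decisions” or “have models”. But the view on which there are multiple forms of the “build a successor” problem is itself a dualistic view.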

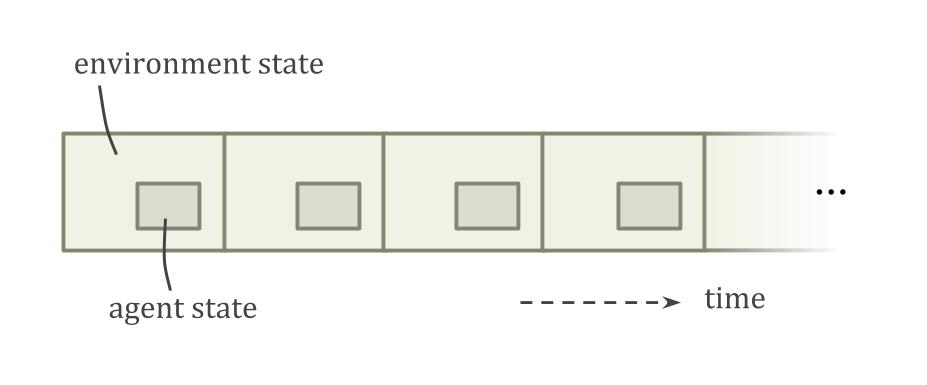

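To an embedded agent, the future self is not privileged; it is just another part of the environment. There isn’t a deep difference between building a successor that shares your goals and just making sure your own goals stay the same over time.

So, although I talk about “initial” and “successor” agents, remember that this isn’t just about the narrow problem humans currently face of aiming a successor. This is about the fundamental problem of being an agent that persists and learns over time.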

We call this problem Robust Delegation. Examples include:
