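Nate Soares’ recent decision theory paper with Ben Levinstein, “Cheating Death in Damascus,” prompted some valuable questions and comments from an acquaintance (anonymized here). I’ve put together edited excerpts from the commenter’s email below, with Nate’s responses.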

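The discussion concerns functional decision theory (FDT), a newly proposed alternative to causal decision theory (CDT) and evidential decision theory (EDT). Where EDT says “choose the most auspicious action” and CDT says “choose the action that has the best effects,” FDT says “choose the output of one’s decision algorithm that has the best effects across all instances of that algorithm.”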

FDT usually behaves similarly to CDT. In a one-shot prisoner’s dilemma between two agents who know they are following FDT, however, FDT parts ways with CDT and prescribes cooperation, on the grounds that each agent runs the same decision-making procedure, and that therefore each agent is effectively choosing for both agents at once.¹

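Below, Nate provides some of his own perspective on why FDT generally achieves higher utility than CDT and EDT. Some of the stances he sketches out here are stronger than the assumptions needed to justify FDT, but they should shed some light on why researchers at MIRI think FDT can help resolve a number of longstanding puzzles in the foundations of rational action.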

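Anonymous: This is great stuff! I’m behind on reading loads of papers and books for my research, but this came across my path and hooked me, which speaks highly of how interesting the content is, and of the sense that this paper is making progress.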

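My general take is that you are right that these kinds of problems need to be specified in more detail. However, my guess is that once you do so, game theorists would get the right answer. Perhaps that’s what FDT is: an approach to clarifying ambiguous games that leads to a formalism where people like Pearl and myself can use our standard approaches to get the right answer.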

I know there’s a lot of inertia in the “decision theory” language, so probably it doesn’t make sense to change. But if there were no such sunk costs, I would recommend a different framing. It’s not that people’s decision theories are wrong; it’s that they are unable to correctly formalize problems in which there are high-performance predictors. You show how to do that, using the idea of intervening on (i.e., choosing between putative outputs of) the algorithm, rather than intervening on actions. Everything else follows from a sufficiently precise and non-contradictory statement of the decision problem.

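Probably the easiest move this line of work could make to ease this knee-jerk response of mine in defense of mainstream Bayesian game theory is to just be clear that CDT is not meant to capture mainstream Bayesian game theory. Rather, it is a model of one response to a class of problems that are not normally considered and for which existing approaches are ambiguous.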

Nate Soares: I don’t accept this view myself. My view is more like: when you add accurate predictors to the Rube Goldberg machine that is the universe (which can in fact be done), the future of that universe can be determined by the behavior of the algorithm being predicted. The algorithm that we put in the “thing-being-predicted” slot can do significantly better if its reasoning on the subject of which actions to output respects the universe’s downstream causal structure (which is something CDT and FDT do, but which EDT neglects), and it can do better again if its reasoning also respects the world’s global logical structure (which is done by FDT alone).

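We don’t yet know how to respect this wider class of dependencies exactly in general, but we do know how to do it in many simple cases. While FDT agrees with modern decision theory and game theory in many simple situations, its prescriptions do seem to differ in non-trivial applications.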

The main case where we can easily see that FDT is not just a better tool for formalizing game theorists’ traditional intuitions is in prisoner’s dilemmas. Game theory is pretty adamant about the fact that it’s rational to defect in a one-shot PD, whereas two FDT agents facing off in a one-shot PD will cooperate.

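In particular, classical game theory employs a “common knowledge of shared rationality” assumption which, when you look closely at it, cashes out more or less as “common knowledge that all parties are using CDT and this axiom.” Game theory where common knowledge of shared rationality is defined to mean “common knowledge that all parties are using FDT and this axiom” gives substantially different results, such as cooperation in one-shot PDs.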
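The contrast can be made concrete with a small sketch. It assumes a standard PD payoff table (the numbers are illustrative, not from the paper) and hypothetical function names. `fdt_choice_against_twin` covers only the special case where both players are known to run the same procedure; it is not a general FDT implementation.

```python
# Illustrative one-shot prisoner's dilemma; the payoff numbers are assumptions.
PAYOFF = {  # (my_action, their_action) -> my payoff
    ("C", "C"): 3, ("C", "D"): 0,
    ("D", "C"): 5, ("D", "D"): 1,
}
ACTIONS = ("C", "D")


def cdt_choice(p_other_cooperates: float) -> str:
    """Best-respond while holding the other player's behavior fixed."""
    def expected_payoff(action: str) -> float:
        return (p_other_cooperates * PAYOFF[(action, "C")]
                + (1 - p_other_cooperates) * PAYOFF[(action, "D")])
    return max(ACTIONS, key=expected_payoff)


def fdt_choice_against_twin() -> str:
    """Both players run this same function, so one output sets both actions."""
    return max(ACTIONS, key=lambda action: PAYOFF[(action, action)])
```

Under these assumptions `cdt_choice` defects whatever it believes about the other player, while the twin-aware chooser compares only the outcomes (C, C) and (D, D) and cooperates.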

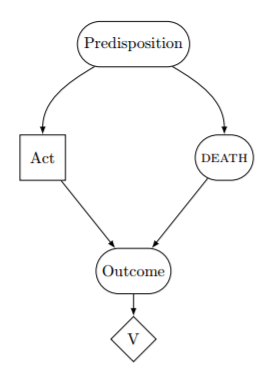

A causal graph of Death in Damascus for CDT agents.²

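Anonymous: In the past, when I’ve read MIRI’s work on CDT, it seemed to me to describe what standard game theorists mean by rationality. But at least in cases like Murder Lesion, I don’t think it’s fair to say that standard game theorists would prescribe CDT. It might be better to say that standard game theory doesn’t consider these kinds of settings, and that there are multiple ways of responding to them, CDT being one.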

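But I also suspect that many of these perfect-prediction problems are internally inconsistent, and so it’s irrelevant what CDT would prescribe, since the problem cannot arise. That is, it’s not reasonable to say game theorists would recommend such-and-such in a certain problem when the problem postulates that the actor always has incorrect expectations; “all agents have correct expectations” is a core property of most game-theoretic problems.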

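The Death in Damascus problem for CDT agents is a good example of this. In this problem, either Death does not find the CDT agent with certainty, or the CDT agent can never have correct beliefs about her own actions, or she will be unable to best respond to her own beliefs.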

So the problem statement (“Death finds the agent with certainty”) rules out typical assumptions of a rational actor: that it has rational expectations (including about its own behavior), and that it can choose the preferred action in response to its beliefs. The agent can only have correct beliefs if she believes that she has such-and-such belief about which city she’ll end up in, but doesn’t select the action that is the best response to that belief.

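Nate: I contest that last claim. The trouble is in the phrase “best response,” where you’re using CDT’s notion of what counts as the best response. According to FDT’s notion of “best response,” the best response to your beliefs in the Death in Damascus problem is to stay in Damascus, if we’re assuming it costs nonzero utility to make the trek to Aleppo.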

In order to define what the best response to a problem is, we normally invoke a notion of counterfactuals: what are your available responses, and what do you think follows from them? But the question of how to set up those counterfactuals is the very point under contention.

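So I’ll grant that if you define “best response” in terms of CDT’s counterfactuals, then Death in Damascus rules out the typical assumptions of a rational actor. If you use FDT’s counterfactuals (i.e., counterfactuals that respect the full range of subjunctive dependencies), however, then you get to keep all the usual assumptions about rational actors. We can say that FDT has the theoretical advantage over CDT that it allows agents to exhibit these sensible-seeming properties in a wider range of problems.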

Anonymous: The presentation of the Death in Damascus problem for CDT feels weird to me. CDT might also just turn up an error, since one of its assumptions is violated by the problem. Or it might cycle through beliefs forever… The expected utility calculation here seems to give some credence to the possibility of dodging death, which is assumed to be impossible, so it doesn’t seem to me to correctly reason in a CDT way about where death will be.

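For some reason I want to defend the CDT agent, and say that it’s not fair to say they wouldn’t realize that their strategy produces a contradiction (given the assumptions of rational belief and agency) in this problem.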

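Nate: There are a few different things to note here. The first is that my inclination is always to evaluate CDT as an algorithm: if you built a machine that follows the CDT equation to the letter, what would it do?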

The answer here, as you’ve rightly noted above, is that the CDT equation isn’t necessarily defined when the input is a problem like Death in Damascus, and I agree that simple definitions of CDT yield algorithms that would either enter an infinite loop or crash. The third alternative is that the agent notices the difficulty and engages in some sort of reflective-equilibrium-finding procedure; variants of CDT with this sort of patch were invented more or less independently by Joyce and Arntzenius to do exactly that. In the paper, we discuss the variants that run an equilibrium-finding procedure and show that the equilibrium is still unsatisfactory; but we probably should have been more explicit about the fact that vanilla CDT either crashes or loops.
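The looping behavior can be illustrated with a toy model. This is a sketch under stated assumptions: the dollar values and function names are hypothetical, the agent is modeled as certain about its own destination, and Death is assumed to wait wherever the agent currently believes it will end up. It models only naive CDT, not the equilibrium-finding variants.

```python
# Toy model of naive CDT in Death in Damascus. All numbers are illustrative.
LIFE_VALUE = 1000   # assumed value of surviving
TRAVEL_COST = 1     # assumed cost of the trek to Aleppo
CITIES = ("Damascus", "Aleppo")


def causal_utility(action: str, death_city: str) -> int:
    """Utility of going to `action` when Death's location is held fixed."""
    survives = action != death_city
    cost = TRAVEL_COST if action == "Aleppo" else 0
    return (LIFE_VALUE if survives else 0) - cost


def cdt_best_response(believed_own_city: str) -> str:
    """Death is predicted to be where the agent believes it will go;
    naive CDT treats that location as causally independent of its act."""
    death_city = believed_own_city
    return max(CITIES, key=lambda action: causal_utility(action, death_city))


def belief_trajectory(start: str, steps: int) -> list:
    """Repeatedly update the belief to the current best response."""
    trajectory = [start]
    for _ in range(steps):
        trajectory.append(cdt_best_response(trajectory[-1]))
    return trajectory
```

In this model `belief_trajectory("Damascus", 4)` alternates between the two cities and never settles, which is the instability described above.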

Second, I acknowledge that there’s still a strong intuition that an agent should in some sense be able to reflect on their own instability, look at the problem statement, and say, “Aha, I see what’s going on here; Death will find me no matter what I choose; I’d better find some other way to make the decision.” However, this sort of response is explicitly ruled out by the CDT equation: CDT says you must evaluate your actions as if they were subjunctively independent of everything that doesn’t causally depend on them.

In other words, you’re correct that CDT agents know intellectually that they cannot escape Death, but the CDT equation requires agents to imagine that they can, and to act on this basis.

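And, to be clear, it is not a strike against an algorithm for it to prescribe actions by reasoning about impossible scenarios. Any deterministic algorithm attempting to reason about what it “should do” must imagine some impossibilities, because a deterministic algorithm has to reason about the consequences of doing lots of different things, but is in fact only going to do one thing.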

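The question at hand is which impossibilities are the right ones to imagine, and the claim is that in scenarios with accurate predictors, CDT prescribes imagining the wrong impossibilities, including impossibilities where it escapes Death.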

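Our human intuitions say that we should reflect on the problem statement and eventually realize that escaping Death is in some sense “too impossible to consider.” But this directly contradicts the advice of CDT. Following this intuition requires us to make our beliefs obey a logical-but-not-causal constraint in the problem statement (“Death is a perfect predictor”), which FDT agents can do but CDT agents can’t. On close examination, the “shouldn’t CDT realize this is wrong?” intuition turns out to be an argument for FDT in another guise. (Indeed, pursuing this intuition is part of how FDT’s predecessors were discovered!)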

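Third, I’ll note that it’s an important virtue in general for decision theories to be able to reason correctly in the face of apparent inconsistency. Consider the following simple example: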

An agent has a choice between taking $1 or taking $100. There is an extraordinarily tiny but nonzero probability that a cosmic ray will spontaneously strike the agent’s brain in such a way that they will be caused to do the opposite of whichever action they would normally do. If they learn that they have been struck by a cosmic ray, then they will also need to visit the emergency room to ensure there’s no lasting brain damage, at a cost of $1000. Furthermore, the agent knows that they take the $100 if and only if they are hit by the cosmic ray.

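When faced with this problem, an EDT agent reasons: “If I take the $100, then I must have been hit by the cosmic ray, which means that I lose $900 on net. I therefore prefer the $1.” They then take the $1 (except in cases where they have been hit by the cosmic ray).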

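Since this is just what the problem statement says (“the agent knows that they take the $100 if and only if they are hit by the cosmic ray”), the problem is perfectly consistent, as is EDT’s response to it. EDT only cares about correlation, not dependency; so EDT agents are perfectly happy to buy into self-fulfilling prophecies, even when it means turning their backs on large sums of money.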

What happens when we try to pull this trick on a CDT agent? She says, “Like hell I only take the $100 if I’m hit by the cosmic ray!” and grabs the $100 — thus revealing your problem statement to be inconsistent if the agent runs CDT as opposed to EDT.

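The claim that “the agent knows that they take the $100 if and only if they are hit by the cosmic ray” contradicts the definition of CDT, which requires that CDT agents refuse to leave free money on the table. As you may verify, FDT also renders the problem statement inconsistent, for similar reasons. The definition of EDT, on the other hand, is fully consistent with the problem as stated.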

This means that if you try to put EDT into the above situation — controlling its behavior by telling it specific facts about itself — you will succeed; whereas if you try to put CDT into the above situation, you will fail, and the supposed facts will be revealed as lies. Whether or not the above problem statement is consistent depends on the algorithm that the agent runs, and the design of the algorithm controls the degree to which you can put that algorithm in bad situations.
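The two lines of reasoning in the cosmic ray example can be written out as a short calculation. This is a minimal sketch: `EPSILON` is a hypothetical stand-in for the "extraordinarily tiny" probability, and the function names are illustrative. The EDT evaluation conditions on the action using the stipulated fact "takes $100 iff hit", while the CDT evaluation treats the ray as causally independent of the choice.

```python
EPSILON = 1e-9   # assumed probability of a cosmic ray strike
ER_COST = 1000   # cost of the emergency room visit, from the problem statement
OPTIONS = (1, 100)


def edt_value(take: int) -> float:
    """Condition on the act: P(hit | take $100) = 1 and P(hit | take $1) = 0."""
    hit = (take == 100)
    return take - (ER_COST if hit else 0)


def cdt_value(take: int, p_ray: float = EPSILON) -> float:
    """The act does not cause the ray, so its probability stays at p_ray."""
    return take - p_ray * ER_COST


edt_pick = max(OPTIONS, key=edt_value)
cdt_pick = max(OPTIONS, key=cdt_value)
```

Here `edt_value(100)` is -900, so EDT settles for the $1, while CDT's two options differ only by the $99 it would leave on the table, so it takes the $100.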

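We can think of this as a case of FDT and CDT succeeding in making a low-utility universe impossible, where EDT fails to make a low-utility universe impossible. The whole point of implementing a decision theory on a piece of hardware and running it is to make bad futures of our universe impossible (or at least very unlikely). It’s a feature of a decision theory, and not a bug, for there to be some problems where one tries to describe a low-utility state of affairs and the decision theory says, “I’m sorry, but if you run me in that problem, your problem will be revealed as inconsistent”.³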

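This doesn’t contradict anything you’ve said; I say it only to highlight how little we can conclude from noticing that an agent is reasoning about an inconsistent state of affairs. Reasoning about impossibilities is the mechanism by which decision theories produce actions that force the outcome to be desirable, so we can’t conclude that an agent has been placed in an unfair situation from the fact that the agent is forced to reason about an impossibility.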

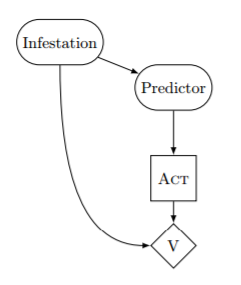

A causal graph of the XOR blackmail problem for CDT agents.⁴

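Anonymous: Something still seems fishy to me about decision problems that assume perfect predictors. If I’m being predicted with 100% accuracy in the XOR blackmail problem, then this means that I can induce a contradiction. If I follow FDT and CDT’s recommendation of never paying, then I only receive a letter when I have termites. But if I pay, then I must be in the world where I don’t have termites, as otherwise there is a contradiction.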

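So it seems that I am able to intervene on the world in a way that changes the state of termites for me now, given that I’ve received a letter. That is, the best strategy when starting is to never pay, but the best strategy given that I will receive a letter is to pay. The weirdness arises because I’m able to intervene on the algorithm, but we are conditioning on a fact of the world that depends on my algorithm.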

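Not sure if this confusion makes sense to you. My gut says that these kinds of problems are often self-contradicting, at least when we assert 100% predictive performance. I would prefer to work it out from the ex ante situation, with specified probabilities of getting termites, and see if it is the case that changing one’s strategy (at the algorithm level) is possible without changing the probability of termites to maintain consistency of the prediction claim.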

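Nate: First off, I’ll note that the problem goes through fine if the prediction is only correct 99% of the time. If the difference between the “cost of termites” and the “cost of paying” is sufficiently high, then the problem can probably go through even if the predictor is only correct 51% of the time.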
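One way to check this claim is to model an imperfect predictor explicitly. The model below is an assumption, not the paper's formalization: the predictor guesses the agent's response to the letter correctly with probability `accuracy`, independently of the termites, and the prior probability of termites is a hypothetical 0.5 (the model needs a prior strictly between 0 and 1). The sketch shows only EDT's side of the problem, namely that conditioning on the letter still recommends paying.

```python
TERMITE_COST = 1_000_000
PAYMENT = 1_000


def p_termites_given_letter(action: str, accuracy: float, prior: float) -> float:
    """P(termites | letter received, agent's actual response is `action`)."""
    if action == "pay":
        with_termites = prior * (1 - accuracy)           # mispredicted as "refuse"
        without_termites = (1 - prior) * accuracy        # correctly predicted "pay"
    else:
        with_termites = prior * accuracy                 # correctly predicted "refuse"
        without_termites = (1 - prior) * (1 - accuracy)  # mispredicted as "pay"
    return with_termites / (with_termites + without_termites)


def edt_pays(accuracy: float, prior: float = 0.5) -> bool:
    """EDT compares expected utilities conditional on holding the letter."""
    eu_pay = -PAYMENT - TERMITE_COST * p_termites_given_letter("pay", accuracy, prior)
    eu_refuse = -TERMITE_COST * p_termites_given_letter("refuse", accuracy, prior)
    return eu_pay > eu_refuse
```

Under these assumptions `edt_pays(0.99)` and `edt_pays(0.51)` are both true, because a two-point shift in the conditional probability of a $1,000,000 loss outweighs the $1,000 payment. At `accuracy=0.5` the letter carries no information and EDT stops paying.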

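That said, the point of this example is to draw attention to some of the issues you’re raising here, and I think these issues are just easier to think about when we assume 100% predictive accuracy.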

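The claim I dispute is this one: “That is, the best strategy when starting is to never pay, but the best strategy given that I will receive a letter is to pay.” I claim that the best strategy given that you receive the letter is to not pay, because whether you pay has no effect on whether or not you have termites. Whenever you pay, no matter what you’ve learned, you’re basically just burning $1000.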

That said, you’re completely right that these decision problems have some inconsistent branches, though I claim that this is true of any decision problem. In a deterministic universe with deterministic agents, all “possible actions” the agent “could take” save one are not going to be taken, and thus all “possibilities” save one are in fact inconsistent given a sufficiently full formal specification.

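I also completely endorse the claim that this set-up allows the predicted agent to induce a contradiction. Indeed, I claim that all decision-making power comes from the ability to induce contradictions: the whole reason to write an algorithm that loops over actions, constructs models of the outcomes that would follow from those actions, and outputs the action corresponding to the highest-ranked outcome is so that it is contradictory for the algorithm to output a suboptimal action.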

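This is what computer programs are all about. You write the code in such a way that the only non-contradictory way for the electricity to flow through the transistors is the way that makes your computer do your tax returns.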

In the case of the XOR blackmail problem, there are four “possible” worlds: LT (letter + termites), NT (noletter + termites), LN (letter + notermites), and NN (noletter + notermites).

The predictor, by dint of their accuracy, has put the universe into a state where the only consistent possibilities are either (LT, NN) or (LN, NT). You get to choose which of those pairs is consistent and which is contradictory. Clearly, you don’t have control over the probability of termites vs. notermites, so you’re only controlling whether you get the letter. Thus, the question is whether you’re willing to pay $1000 to make sure that the letter shows up only in the worlds where you don’t have termites.

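Even when you’re holding the letter in your hands, I claim that you should not say “if I pay, I will have no termites,” because that is false: your action can’t affect whether you have termites. You should instead say: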

I see two possibilities here. If my algorithm outputs pay, then in the XX% of worlds where I have termites I get no letter and lose $1M, and in the (100-XX)% of worlds where I do not have termites I lose $1k. If instead my algorithm outputs refuse, then in the XX% of worlds where I have termites I get this letter but only lose $1M, and in the other worlds I lose nothing. The latter mixture is preferable, so I do not pay.

You’ll notice that the agent in this line of reasoning is not updating on the fact that they’re holding the letter. They’re not saying, “Given that I know that I received the letter and that the universe is consistent…”
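The policy-level comparison in the quoted reasoning can be sketched as follows, assuming a perfect predictor. The function names and the world labels produced by `consistent_worlds` are illustrative, and `p_termites` plays the role of the unspecified XX%.

```python
TERMITE_COST = 1_000_000
PAYMENT = 1_000


def letter_sent(termites: bool, policy: str) -> bool:
    """Perfect predictor: send iff exactly one of (i) or (ii) holds, where
    (i) = no termites and the agent pays, (ii) = termites and the agent refuses."""
    pays = policy == "pay"
    return ((not termites) and pays) != (termites and (not pays))


def consistent_worlds(policy: str) -> set:
    """The worlds (letter/noletter + termites/notermites) compatible with a policy."""
    worlds = set()
    for termites in (True, False):
        letter = letter_sent(termites, policy)
        worlds.add(("L" if letter else "N") + ("T" if termites else "N"))
    return worlds


def expected_loss(policy: str, p_termites: float) -> float:
    """Expected loss of a policy, evaluated without updating on the letter."""
    loss = 0.0
    for termites, prob in ((True, p_termites), (False, 1 - p_termites)):
        cost = TERMITE_COST if termites else 0
        if policy == "pay" and letter_sent(termites, policy):
            cost += PAYMENT
        loss += prob * cost
    return loss
```

With these definitions the paying policy is consistent only with the worlds LN and NT, the refusing policy only with LT and NN, and refusing has the lower expected loss for any termite probability below 1.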

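One way to think about this is to imagine that the agent is not yet sure whether or not they are in a contradictory universe. They act as though this might be a world where they have no termites and yet received the letter; in those worlds, by refusing to pay, they make the world they inhabit inconsistent, and thereby make it the case that this scenario never existed.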

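And this is correct reasoning! For when the predictor makes their prediction, they’ll visualize a scenario where the agent has no termites and gets the letter, in order to figure out what the agent would do. When the predictor observes that the agent would make the universe contradictory (by refusing to pay), they are bound (by their own commitments, and by their accuracy as a predictor) to send the letter only when you have termites.⁵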

You’ll never find yourself in a contradictory situation in the real world, but when an accurate predictor is trying to figure out what you’ll do, they don’t yet know which situations are contradictory. They’ll therefore imagine you in situations that may or may not turn out to be contradictory (like “letter + notermites”). Whether or not you would force the contradiction in those cases determines how the predictor will actually behave towards you.

The real world is never contradictory, but predictions about you can certainly place you in contradictory hypotheticals. In cases where you want to force a certain hypothetical world to imply a contradiction, you have to be the sort of person who would force the contradiction if given the opportunity.

Or as I like to say: forcing the contradiction never works, but it always would work, which is sufficient.

Anonymous: The FDT algorithm is best ex ante. But if what you care about is your utility in your own life flowing after you, and not that of other instantiations, then upon hearing this news about FDT you should do whatever is best for you given that information and your beliefs, as per CDT.

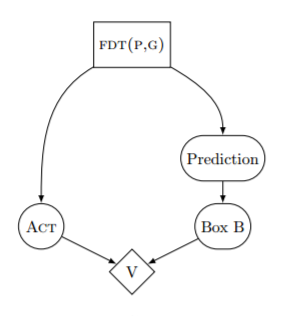

A causal graph of Newcomb’s problem for FDT agents.⁶

Nate: If you have the ability to commit yourself to future behaviors (and actually stick to that), it’s clearly in your interest to commit now to behaving like FDT on all decision problems that begin in your future. I, for instance, have made this commitment myself. I’ve also made stronger commitments about decision problems that began in my past, but all CDT agents should agree in principle on problems that begin in the future.⁷

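I do believe that real-world people like you and me can actually follow FDT’s prescriptions, even in cases where those prescriptions are quite counter-intuitive.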

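Consider a variant of Newcomb’s problem where both boxes are transparent, so that you can see whether box B is full before choosing whether to two-box. In this case, EDT joins CDT in two-boxing, because one-boxing can no longer serve to give the agent good news about its fortunes. But FDT agents still one-box, for the same reason they one-box in Newcomb’s original problem and cooperate in the prisoner’s dilemma: they imagine their algorithm controlling all instances of their decision procedure, including the past copy in the mind of their predictor.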

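Now, suppose that you’re standing in front of two full boxes in the transparent Newcomb problem. You might say to yourself, “I wish I could have committed beforehand, but now that the choice is before me, the tug of the extra $1000 is just too strong,” and then decide that you were not actually capable of making binding precommitments. This is fine; the normatively correct decision theory might not be something that all human beings have the willpower to follow in real life, just as the correct moral theory could turn out to be something that some people lack the will to follow.⁸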

That said, I believe that I’m quite capable of just acting like I committed to act. I don’t feel a need to go through any particular mental rituals in order to feel comfortable one-boxing. I can just decide to one-box and let the matter rest.

I want to be the kind of agent that sees two full boxes, so that I can walk away rich. I care more about doing what works, and about achieving practical real-world goals, than I care about the intuitiveness of my local decisions. And in this decision problem, FDT agents are the only agents that walk away rich.
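The "walk away rich" claim can be checked in a toy model of the transparent variant. The sketch assumes a specific rule that the text above does not spell out: the predictor fills box B exactly when it predicts the agent would one-box upon seeing B full. The standard amounts ($1,000 in box A, $1,000,000 in box B) and all function names are likewise assumptions of the sketch.

```python
BOX_A = 1_000
BOX_B = 1_000_000


def always_one_box(box_b_full: bool) -> str:
    return "one-box"


def always_two_box(box_b_full: bool) -> str:
    return "two-box"


def predictor_fills_box_b(policy) -> bool:
    """Assumed rule: simulate the agent seeing a full box B, and fill it
    only if the simulated agent takes box B alone."""
    return policy(True) == "one-box"


def payoff(policy) -> int:
    """Run the predictor first, then let the agent act on what it sees."""
    full = predictor_fills_box_b(policy)
    action = policy(full)
    winnings = BOX_B if full else 0
    if action == "two-box":
        winnings += BOX_A
    return winnings
```

Under this rule a committed one-boxer sees a full box and leaves with $1,000,000, while a committed two-boxer never sees a full box and leaves with $1,000.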

One way of making sense of this kind of reasoning is that evolution graced me with a “just do what you promised to do” module. The same style of reasoning that allows me to actually follow through and one-box in Newcomb’s problem is the one that allows me to cooperate in prisoner’s dilemmas against myself, including dilemmas like “should I stick to my New Year’s resolution?”⁹ I claim that it is only misguided CDT philosophers who argue (wrongly) that “rational” agents aren’t allowed to use that evolution-given “just follow through with your promises” module.

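Anonymous: A final point: I don’t know about counterlogicals, but a theory of functional similarity would seem to depend on the details of the algorithms.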

E.g., we could have a model where their output is stochastic, but some parameters of that process are the same (such as expected value), and the action is stochastically drawn from some distribution with those parameter values. We could have a version of that, but where the parameter values depend on private information picked up since the algorithms split, in which case each agent would have to model the distribution of private info the other might have.

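This seems pretty general; does that work? Is there a class of functional similarity that cannot be expressed using that formulation?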

Nate: As long as the underlying distribution can be an arbitrary Turing machine, I think that’s sufficiently general.

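There are actually a few non-obvious technical hurdles here; namely, if agent A is basing their beliefs off of their model of agent B, who is basing their beliefs off of a model of agent A, then you can get some strange loops.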

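Consider for example the matching pennies problem: agent A and agent B will each place a penny on a table; agent A wants either HH or TT, and agent B wants either HT or TH. It’s non-trivial to ensure that both agents develop stable accurate beliefs in games like this (as opposed to, e.g., diving into infinite loops).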

The technical solution to this is reflective oracle machines, a class of probabilistic Turing machines with access to an oracle that can probabilistically answer questions about any other machine in the class (with access to the same oracle).

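The paper “Reflective Oracles: A Foundation for Classical Game Theory” shows how to do this and shows that the relevant fixed points always exist. (Furthermore, in cases that can be represented in classical game theory, the fixed points always correspond to the mixed-strategy Nash equilibria.)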
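For matching pennies, the mixed-strategy Nash equilibrium that such a fixed point would correspond to can be verified directly. The sketch below does not implement reflective oracles; it only checks the equilibrium condition for a candidate pair of mixed strategies, using assumed payoffs of +1 for a win and -1 for a loss and hypothetical function names.

```python
def expected_payoff_a(p: float, q: float) -> float:
    """A's expected payoff when A plays heads with probability p and B with q.
    A wins (+1) on a match and loses (-1) on a mismatch; B's payoff is the negative."""
    match = p * q + (1 - p) * (1 - q)
    return match - (1 - match)


def is_nash(p: float, q: float, tol: float = 1e-12) -> bool:
    """Neither player can gain by deviating to a pure strategy. Because payoffs
    are linear in a player's own mixture, checking pure deviations is enough."""
    payoff_a = expected_payoff_a(p, q)
    payoff_b = -payoff_a
    best_a = max(expected_payoff_a(1.0, q), expected_payoff_a(0.0, q))
    best_b = max(-expected_payoff_a(p, 1.0), -expected_payoff_a(p, 0.0))
    return best_a <= payoff_a + tol and best_b <= payoff_b + tol
```

`is_nash(0.5, 0.5)` holds, while any pure profile such as `is_nash(1.0, 1.0)` fails because the losing player would switch. That instability is the loop that naive mutual modeling falls into.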

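This more or less lets us start from the question “how do agents with probabilistic information about each other’s source code come to stable beliefs about each other?” and gets us to the “common knowledge of rationality” axiom from game theory.¹⁰ One can also see it as a justification for that axiom, or as a generalization of that axiom that works even in cases where the lines between agent and environment get blurry, or as a hint at what we should do in cases where one agent has significantly more computational resources than the other, etc.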

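But, yes, when we study these kinds of problems concretely at MIRI, we tend to use models where each agent models the other as a probabilistic Turing machine, which seems roughly in line with what you’re suggesting here.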

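- CDT prescribes defection in this dilemma, on the grounds that one’s action cannot cause the other agent to cooperate. FDT outperforms CDT in Newcomblike dilemmas like these, while also outperforming EDT in other dilemmas, such as the smoking lesion problem and XOR blackmail.↩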

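- The agent’s predisposition determines whether they will flee to Aleppo or stay in Damascus, and also determines Death’s prediction about their decision. This allows Death to inescapably pursue the agent, making flight pointless; but CDT agents can’t incorporate this fact into their decision-making.↩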

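- There are some fairly natural ways to cash out Murder Lesion where CDT accepts the problem and FDT forces a contradiction, but we decided not to delve into that interpretation in the paper.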

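Tangentially, I’ll note that one of the most common defenses of CDT similarly turns on the idea that certain dilemmas are “unfair” to CDT. Compare, for example, David Lewis’ “Why Ain’cha Rich?”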

It’s obviously possible to define decision problems that are “unfair” in the sense that they just reward or punish agents for having a certain decision theory. We can imagine a dilemma where a predictor simply guesses whether you’re implementing FDT, and gives you $1,000,000 if so. Since we can construct symmetric dilemmas that instead reward CDT agents, EDT agents, etc., these dilemmas aren’t very interesting, and can’t help us choose between theories.

Dilemmas like Newcomb’s problem and Death in Damascus, however, don’t evaluate agents based on their decision theories. They evaluate agents based on their actions, and it is the decision theory’s job to determine which action is best. If it’s unfair to criticize CDT for making the wrong choice in problems like this, then it’s hard to see on what grounds we can criticize any agent for making a wrong choice in any problem, since one can always claim that one is merely at the mercy of one’s decision theory.↩

- Our paper describes the XOR blackmail problem like so:

An agent has been alerted to a rumor that her house has a terrible termite infestation, which would cost her $1,000,000 in damages. She doesn’t know whether this rumor is true. A greedy and accurate predictor with a strong reputation for honesty has learned whether or not it’s true, and drafts a letter:

“I know whether or not you have termites, and I have sent you this letter iff exactly one of the following is true: (i) the rumor is false, and you are going to pay me $1,000 upon receiving this letter; or (ii) the rumor is true, and you will not pay me upon receiving this letter.”

The predictor then predicts what the agent would do upon receiving the letter, and sends the agent the letter iff exactly one of (i) or (ii) is true. Thus, the claim made by the letter is true. Assume the agent receives the letter. Should she pay up?

In this scenario, EDT pays the blackmailer, while CDT and FDT refuse to pay. See the “Cheating Death in Damascus” paper for more details.↩

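- Ben Levinstein notes that this can be compared to backward induction in game theory with common knowledge of rationality. You suppose you’re at some final decision node which you only would have gotten to (as it turns out) if the players weren’t actually rational to begin with.↩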

- FDT agents intervene on their decision function, “FDT(P,G)”. The CDT version replaces this node with “Predisposition” and instead intervenes on “Act”.↩

- Specifically, the CDT-endorsed response here is: “Well, I’ll commit to acting like an FDT agent on future problems, but in one-shot prisoner’s dilemmas that began in my past, I’ll still defect against copies of myself”.

The problem with this response is that it can cost you arbitrary amounts of utility, provided a clever blackmailer wishes to take advantage. Consider the retrocausal blackmail dilemma in “Toward Idealized Decision Theory”:

There is a wealthy intelligent system and an honest AI researcher with access to the agent’s original source code. The researcher may deploy a virus that will cause $150 million each in damages to both the AI system and the researcher, and which may only be deactivated if the agent pays the researcher $100 million. The researcher is risk-averse and only deploys the virus upon becoming confident that the agent will pay up. The agent knows the situation and has an opportunity to self-modify after the researcher acquires its original source code but before the researcher decides whether or not to deploy the virus. (The researcher knows this, and has to factor this into their prediction.)

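CDT pays the retrocausal blackmail, even if it has the opportunity to precommit to do otherwise. FDT (which in any case has no need for precommitment mechanisms) refuses to pay. I cite the intuitive undesirability of this outcome to argue that one should follow FDT in full generality, as opposed to following CDT’s prescription that one should only behave in FDT-like ways in future dilemmas.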

The argument above must be made from a pre-theoretic vantage point, because CDT is internally consistent. There is no argument one could give to a true CDT agent that would cause it to want to use anything other than CDT in decision problems that began in its past.

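If examples like retrocausal blackmail have force (over and above the force of other arguments for FDT), it is because humans aren’t genuine CDT agents. We may come to endorse CDT based on its theoretical and practical virtues, but the case for CDT is defeasible if we discover sufficiently serious flaws in CDT, where “flaws” are evaluated relative to more elementary intuitions about which actions are good or bad. FDT’s advantages over CDT and EDT (properties like its greater theoretical simplicity and generality, and its achievement of greater utility in standard dilemmas) carry intuitive weight from a position of uncertainty about which decision theory is correct.↩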

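- In principle, it could even turn out that following the prescriptions of the correct decision theory in full generality is humanly impossible. There’s no law of logic saying that normatively correct decision-making behaviors have to be compatible with arbitrary brain designs (including human brain design). I wouldn’t bet on this, but in such a case learning the correct theory would still have practical import, since we could still build AI systems to follow the normatively correct theory.↩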

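- A New Year’s resolution that requires me to repeatedly follow through on a promise that I care about in the long run, but would prefer to ignore in the moment, can be modeled as a one-shot twin prisoner’s dilemma. In this case, the dilemma is temporally extended, and my “twins” are my own future selves, who I know reason more or less the same way I do.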

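It’s conceivable that I could go off my diet today (“defect”) and have my future selves pick up the slack for me and stick to the diet (“cooperate”), but in practice if I’m the kind of agent who isn’t willing today to sacrifice short-term comfort for long-term well-being, then I presumably won’t be that kind of agent tomorrow either, or the day after.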

Seeing that this is so, and lacking a way to force themselves or their future selves to follow through, CDT agents despair of promise-keeping and abandon their resolutions. FDT agents, seeing the same set of facts, do just the opposite: they resolve to cooperate today, knowing that their future selves will reason symmetrically and do the same.↩

- The paper above shows how to use reflective oracles with CDT as opposed to FDT, because (a) one battle at a time and (b) we don’t yet have a generic algorithm for computing logical counterfactuals, but we do have a generic algorithm for doing CDT-type reasoning.↩
